What is SEO?

SEO (Search Engine Optimization) is the marketing process of increasing a website’s visibility in the organic search results for the specific purpose of obtaining more traffic.

How SEO Works

Every major search engine, such as Google, Bing, and Yahoo, provides a listing of websites that are deemed to best answer a search user’s query. Those listings are called search results. Within those search results you will find paid listings, but the bulk of the results are made up of what are called organic listings. The organic results are the free listings that the search engines have found, through their analysis, to be the most relevant to the user’s query.

Being listed at the top in the organic listings is at the root of why SEO is so important. Organic results currently receive a significantly greater share of traffic in comparison to paid ads. So, if your business isn’t being found in the organic listings, you are missing out on a large percentage of the consumer market.

Getting to the top of the results is where search engine optimization comes in. There are numerous strategies and techniques that go into creating a relevant and optimized website to truly compete for the keywords that can generate revenue for your business.

See Also: Why is SEO Important?

How to Get Started with SEO

Basic SEO isn’t hard to get started with; it just takes time and plenty of patience. Web pages don’t typically rank overnight for competitive keywords. If you are willing to create a solid foundation of optimization for your website, and put in the time to build upon it, you will achieve success and grow your organic traffic. Businesses that cut corners may achieve short-term results, but they almost always fail in the long term, and it is very difficult for them to recover.

So how do you go about optimizing your website? You must first understand how the search engines work, as well as the various techniques that make up SEO. The sections below cover each of these topics in turn.

How Does Google Rank Websites?

The search engines use automated robots, also known as web crawlers or spiders, to scan and catalog web pages, PDFs, image files, etc. for possible inclusion in their massive indexes. From there, each web page and file is evaluated by programs called algorithms that determine whether it offers enough unique value to be included in the index.

If a file is deemed valuable enough to be added to the index, it will only be displayed for queries where the algorithms have determined that it is relevant and meets the intent of the user’s query. This is the true secret to the success of the search engines. The better they answer a user’s queries, the more likely it is that the user will use them in the future. It is generally accepted, as evidenced by the sheer number of their users, that Google delivers more relevant results because of their highly sophisticated algorithms, which they are constantly improving. In an effort to maintain their competitive advantage, it is believed that Google makes 500-600 updates to their algorithm each year.

User intent is playing a greater role in how search engines rank web pages. For example, if a user is searching for “SEO companies,” is the user looking for articles on how to start an SEO company, or are they looking for a listing of SEO companies that provide the service? In this case, the latter is more likely. Even though there is a small chance that the user may be looking to start an SEO business, Google (with their expansive data on user habits) understands that the vast majority will be expecting to see a listing of companies. All of this is built into Google’s algorithm.

Search Engine Ranking Factors

As stated previously, these algorithms are highly sophisticated, so there are numerous factors that these programs are considering to determine relevance. In addition, Google and the other search engines work very hard to prevent businesses from “gaming” the system and manipulating the results.

So how many ranking factors are there? Well, back on May 10, 2006, Google’s Matt Cutts declared that there are over 200 search ranking signals that Google considers.

Then, in 2010, Matt Cutts stated that those 200+ search ranking factors each had up to 50 variations. That would put the actual number of unique signals as high as 10,000 (200 × 50). Even though there are so many signals, they don’t each carry an equal amount of weight.

For websites wanting to rank for nationally competitive keywords, the signals that matter most are related to on-page, off-page, and penalty-related factors. On-page optimization is related to content, and for a website to have a chance to rank for competitive search phrases, the content needs to be very relevant and answer the search user’s query. Off-page factors are related to the link popularity of the website and how authoritative external sources find your content. As for the penalty-related factors, if you are caught violating Google’s Webmaster Guidelines, you are not going to be competitive at all.

For websites that are looking to rank in their local market, the factors are very similar, with the addition of Google My Business, local listings, and reviews. Google My Business and citations (mentions of the business’s name, address, and phone number on other websites) help verify the actual location of the business and its service area. Reviews help determine the popularity of that local business.

On-Page SEO

On-page SEO is the optimization of the HTML code and content of the website as opposed to off-page SEO, which pertains to links and other external signals pointing to the website.

The overall goal behind on-page SEO is to make sure that the search engines can find your content, and that the content on the web pages is optimized to rank for the thematically relevant keywords that you would like to target. You have to go about things the right way, however. Each page should have its own unique theme, and you should not force optimization onto a web page. If the keywords don’t match the theme of your product or service, you may need to create a separate page for that particular set of keywords.

If you optimize the title, description, and headings while creating content appropriately, the end user will have a significantly better experience on your website as they will have found the content that they were expecting to find. If you misrepresent the content in the title tag and description, it will lead to higher bounce rates on your website as visitors will leave quickly when they aren’t finding what they expect.

That is the high-level overview of on-page SEO. There are several key on-page topics that we are going to cover, including HTML coding, keyword research, and content optimization. These are foundational items that need to be addressed to have a chance at higher rankings in the search engines.

Website Audit

If your website is having difficulty ranking, you may want to start with an SEO audit. You need a 360-degree analysis to identify your website’s shortcomings so that you can correct them and put your site back on a more solid foundation. An audit can help identify glitches or deficiencies, both on and off page, that may be holding your website back from ranking for your targeted keywords.

There are SEO crawling tools that can help you identify basic technical issues fairly easily, making that part of the auditing process much more efficient. An audit typically would not stop at a technical analysis. Content and the link profile of a website also play a significant role in the ranking ability of a website. If you don’t have quality content or authoritative links, it will be difficult to rank for highly competitive search phrases.

See Also: Technical SEO Audit Process + Checklist

HTTPS Security

Google, in an effort to create a safer and more secure web experience for their search users, has been pressing webmasters to secure their websites. Safe experiences are important for Google’s search engine. If search users have confidence in the safety of the results being displayed, they will be more likely to continue using Google’s search engine in the future.

While Google might have a good reason to encourage webmasters to secure their sites, webmasters have just as much incentive. When people are purchasing online, potential customers are going to feel much more confident completing a transaction with a site that is secure versus one that isn’t.

To strongly encourage webmasters to secure their websites, Google has made HTTPS a search ranking factor. Websites served over HTTPS (which requires an SSL/TLS certificate) will be favored slightly above those that are not. It isn’t a big factor, but it is enough that, if all other factors are equal, the secured site will win out.

Mobile-Friendly Design

Over 60% of all search queries are conducted on a mobile device now. Google is working to create the best possible search experience; therefore, they need to give preference to websites that are improving their mobile usability.

Providing a better mobile experience can be done in several ways. The most common method is a responsive design, which adjusts the layout to the size of the browser window the page is viewed in. Other sites serve a mobile-specific version of a web page when the server detects that the visitor is using a mobile browser.
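For the second approach, the server has to pick a version of the page on every request. The sketch below is a minimal illustration, assuming hypothetical template names `mobile.html` and `desktop.html`. It uses the rough heuristic suggested in MDN's guidance on user-agent detection (treat any User-Agent string containing "Mobi" as mobile) and sends a `Vary: User-Agent` header so caches and crawlers can tell that the HTML changes by device.

```python
def choose_template(user_agent):
    """Pick a (hypothetical) template name for serving by device type.

    "Mobi" is a rough heuristic: most mobile browsers include "Mobile"
    (or "Mobi") somewhere in their User-Agent string.
    """
    is_mobile = "Mobi" in (user_agent or "")
    template = "mobile.html" if is_mobile else "desktop.html"
    # Signal that the response body depends on the User-Agent header
    headers = {"Vary": "User-Agent"}
    return template, headers


iphone_ua = ("Mozilla/5.0 (iPhone; CPU iPhone OS 17_0 like Mac OS X) "
             "AppleWebKit/605.1.15 (KHTML, like Gecko) Version/17.0 "
             "Mobile/15E148 Safari/604.1")
print(choose_template(iphone_ua))  # ('mobile.html', {'Vary': 'User-Agent'})
```

User-agent sniffing is fragile, which is one reason responsive design has become the more common choice.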

The speed at which mobile pages load is also important. Google backs a project called Accelerated Mobile Pages (AMP) and, in 2016, they integrated AMP listings into their mobile search results.

Taking into consideration that the majority of searches are now mobile based, Google is creating a mobile-first index. This will become Google’s primary index of web pages, and it has been rolling out slowly since the end of 2017. Should you be concerned if your site isn’t mobile friendly? Maybe not in the short term, but in the coming months, yes. Websites that provide a good mobile experience will have a greater ability to rank higher in the search results. This isn’t just Google, however. Bing is moving in this direction as well.

See Also: How Google’s Mobile-First Index Works

Website Page Speed

Page speed is the length of time it takes for a web page to load. With Google’s mobile-first index, page speed is going to play a greater role as a ranking factor.

Once again, it is about the user’s experience. Visitors to a website just don’t want to wait for pages to load. The longer it takes for your web page to load, the greater the chance that the visitor will leave (bounce) and visit your competitor’s site. Google did a study and found that as page load time goes from 1 second to 5 seconds, the probability of a visitor bouncing increases by 90%.

You need to pay attention to the rate at which visitors bounce off your website, as this is also a ranking factor. Not only that, those are lost revenue opportunities. Google cares about these metrics because they need to keep their search users happy with the results being displayed.

There are several things that you can do to improve page speed. Some of these items include the following:

- Enabling file compression

- Minifying CSS, JavaScript, and HTML

- Reducing page redirects

- Removing render-blocking JavaScript

- Leveraging browser caching

- Improving server response time

- Using CDNs (Content Delivery Networks)

- Optimizing image file sizes
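To see why the first item, file compression, matters, here is a small sketch that gzips a repetitive HTML payload with Python's standard `gzip` module. Gzip is the same format a web server uses when it responds with `Content-Encoding: gzip`. The sample markup is made up, and the savings on a real page will vary.

```python
import gzip

# Made-up, repetitive markup standing in for a category listing page
html = "<ul>" + "".join(
    f'<li class="product"><a href="/blue-shoes/style-{i}/">Blue shoe style {i}</a></li>'
    for i in range(200)
) + "</ul>"

raw = html.encode("utf-8")
compressed = gzip.compress(raw)

# A browser that sent "Accept-Encoding: gzip" receives the smaller body
# and decompresses it transparently.
assert gzip.decompress(compressed) == raw
print(f"{len(raw)} bytes -> {len(compressed)} bytes "
      f"({100 * len(compressed) / len(raw):.0f}% of original)")
```

In practice you enable this in your web server or CDN configuration rather than in application code.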

Website Crawlability

Search engine robots visit your website and make copies of each page for possible inclusion in their index. If they deem the content valuable, they will include it in their index so that their search users can find it. That is why it is important to make sure that the search engines can easily find the pages within your website. If they can’t find your content, it can’t be included in their index for others to find.

There are technical problems that can prevent search robots from crawling your website, but most websites don’t experience them. One issue is that some websites use JavaScript and Flash, which can sometimes hide links from the search engines. A robot crawls a website by following the links it finds within each web page. If it doesn’t see a link, it won’t crawl it, meaning it won’t know to consider that page for indexing. Other common issues are the accidental inclusion of a “noindex” tag, which tells the search engines to keep a page out of their index, and the blocking of entire folders from being crawled through the robots.txt file.
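One quick way to catch an accidental robots.txt block is to test important URLs against your rules. This sketch uses Python's standard `urllib.robotparser`; the rules and URLs are hypothetical. Note that this parser implements the basic prefix-matching rules, so it may not match how Google handles extensions such as wildcards.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt; the /blue-shoes/ line is the kind of mistake
# that hides an entire category from the search engines.
robots_txt = """\
User-agent: *
Disallow: /checkout/
Disallow: /blue-shoes/
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

for url in ("https://www.example.com/checkout/cart",
            "https://www.example.com/blue-shoes/suede/",
            "https://www.example.com/red-shoes/"):
    allowed = parser.can_fetch("Googlebot", url)
    print(f"{url}: {'crawlable' if allowed else 'BLOCKED'}")
```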

One way to help ensure your content is seen is by creating a sitemap.xml file. This file lists out the various URLs for a website, making it easier for the search engine robots to find the content of your website. Sitemap files are especially helpful for websites with thousands of pages, as links to some content may be buried deep within your website and harder for the search engines to find right away. As a general rule, you should update this file whenever new pages are added.
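A sitemap is a plain XML file, so it is easy to regenerate whenever pages are added. The sketch below builds a minimal file with Python's standard `xml.etree.ElementTree`, using the `urlset`, `url`, and `loc` elements and the namespace defined by the sitemaps.org protocol. The URLs are placeholders, and optional tags such as `lastmod` are left out.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return a minimal sitemap.xml document listing each URL once."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in dict.fromkeys(urls):  # drop duplicates, keep order
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url  # ElementTree escapes & and <
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


print(build_sitemap([
    "https://www.example.com/",
    "https://www.example.com/blue-shoes/",
    "https://www.example.com/blue-shoes/",  # duplicate is ignored
]))
```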

Avoiding Page & Content Duplication

Duplicate content can harm the ranking ability of some of the most important pages of your website. When Google is looking at the pages of your website and finds nearly identical content between two or more pages, they may have difficulty prioritizing which one is more important. As a result, it is very possible they will all suffer in the rankings.

Here are some unintentional, yet common causes of content duplication:

- WWW and Non-WWW versions resolving: If the website resolves at both versions instead of one redirecting to the other, Google may index both versions. Google has done a much better job of handling this, but it does remain a possibility. You can solve this issue by ensuring that you have proper 301 redirects in place.

- Pagination: This is a very common ecommerce SEO issue, but it can happen on other sites too. Let’s say that you have a website that sells shoes with a category called “blue shoes.” You may sell hundreds of different “blue shoes,” but you don’t want to show more than 20 different styles at a time. In this instance, you would probably have multiple pages that visitors can click through. As you click, you notice you are going to a web page with a URL of “blue-shoes/page2/”. That is called pagination. If not handled properly, Google may index every single page, each consisting of nearly identical content, when in reality you want the main category page to be the one ranking in the search results. You can solve this problem by using rel=canonical tags.

- URL Parameters: Staying with our “blue shoes” example, you may want to see what is available in a size 12. You filter the category to show only size 12; you stay on the same page, but you notice the URL now reads “blue-shoes?size=12”. The “size=12” is a URL parameter. Sometimes these parameterized URLs get indexed, which is another very common occurrence for ecommerce websites. You can solve this by using rel=canonical tags or by handling URL parameters through Google Search Console.
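Each of these fixes comes down to choosing one preferred URL per piece of content. Here is a minimal sketch of that normalization, assuming a hypothetical site at `www.example.com` that prefers HTTPS and the www host and treats `size`, `sort`, and `color` as filter-only parameters (ports and fragments are ignored for simplicity). The result is the URL you would 301-redirect to for host variants, or reference in a rel=canonical tag for parameter variants.

```python
from html import escape
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical site policy
PREFERRED_HOST = "www.example.com"
SITE_HOSTS = {"example.com", "www.example.com"}
FILTER_PARAMS = {"size", "sort", "color"}  # don't change the core content


def canonical_url(url):
    parts = urlsplit(url)
    host = parts.hostname or ""
    if host in SITE_HOSTS:
        host = PREFERRED_HOST  # collapse www / non-www onto one version
    # Keep only parameters that actually change the content
    kept = [(k, v) for k, v in parse_qsl(parts.query) if k not in FILTER_PARAMS]
    return urlunsplit(("https", host, parts.path or "/", urlencode(kept), ""))


def canonical_link_tag(url):
    return f'<link rel="canonical" href="{escape(canonical_url(url))}">'


print(canonical_link_tag("http://example.com/blue-shoes/?size=12"))
# <link rel="canonical" href="https://www.example.com/blue-shoes/">
```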

Structured Data

Structured data is organized information that can help the search engines understand your website better. Schema.org was created through a collaboration between Google, Bing, Yandex, and Yahoo to standardize how this information is marked up in HTML.

While there is no conclusive proof that structured data can improve your search rankings, it can help enhance your listings, which can improve your click-through rate. Click-through rate is something that is widely accepted as a ranking factor.

The following are some enhanced listing features as a result of structured data:

- Star ratings and review data

- Knowledge graphs

- Breadcrumbs within your search result

- Inclusion in a rich snippet box for a result

Implementing structured data also helps you prepare for how search is evolving. Search engines are trying to provide instant answers to questions, and this will play an even greater role with voice search.
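Star ratings, for example, come from markup like the following. This sketch serializes a Schema.org `Product` with an `AggregateRating` as JSON-LD, one of the formats Google accepts for structured data. The product name and rating values are placeholders, and Google's documentation spells out which properties each rich result type requires.

```python
import json


def product_jsonld(name, rating_value, review_count):
    """Wrap Schema.org Product + AggregateRating markup in a JSON-LD script tag."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating_value,
            "reviewCount": review_count,
        },
    }
    # "<\/" is a valid JSON escape for "</" and keeps a value from closing the tag early
    body = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{body}\n</script>'


print(product_jsonld("Blue Suede Shoes", 4.6, 89))
```

The script tag can go in the page's head or body; the visible page should show the same ratings the markup describes.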

See Also: Understanding Google Rich Snippets

Role of Domain Names

There has long been a discussion regarding the value of having a keyword in the domain name. There was a time when there was a very strong correlation with ranking ability, but as the algorithms have evolved, that signal has diminished in importance.

That does not mean there isn’t value there, however. It simply means that you shouldn’t place a big focus on the domain name containing the keyword when there are so many signals that are more important.

SEO-Friendly URL Structures

URLs are one of the first things that a search user will notice. The structure of the URL not only has the ability to impact the click-through rates of your search listings, but can also impact your website’s search ranking ability.

- Keywords in the URL: It is a best practice to include keywords in the URL as long as it accurately represents the content of the page. This will give additional confidence to the search user that the page contains the content that they are looking for while also reinforcing the important keywords to the search engines. Going with our blue shoes example, a URL would look like this: “/blue-suede-shoes/”

See Also: How Valuable is it to Have a Keyword in Your Domain URL?

- Don’t Keyword Stuff URLs: You can have too much of a good thing, and keywords in a URL are no exception. When you over-optimize, search users will see a spammy-looking URL, which could hurt your click-through rates, and the search engines could also conclude that you are trying to game the system. A keyword-stuffed URL would look like this: “/shoes/blue-shoes/blue-suede/best-blue-suede-shoes/”

- Pay Attention to URL Length: Keep your URLs as short as practical. Google will truncate URLs that are too long, and that can impact your click-through rate.

- Avoid Dynamic URL Strings: Whenever possible, use a static URL with keywords rather than a dynamic URL made up of symbols and numbers. Dynamic URLs aren’t descriptive and won’t help your listing’s click-through rate. A dynamic URL will look something like this: “/cat/?p=3487”
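The URL tips above can be turned into a small helper. This is a sketch, not a rule: it assumes you generate slugs from page titles, folds accented letters to ASCII (dropping characters with no ASCII equivalent, so it suits English-language URLs), replaces everything else with hyphens, and trims to a hypothetical 60-character limit at a word boundary.

```python
import re
import unicodedata


def slugify(title, max_length=60):
    """Turn a page title into a short, readable URL path like /blue-suede-shoes/."""
    # Fold accented letters to plain ASCII ("Crème" -> "Creme")
    ascii_text = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    # Any run of characters that aren't letters or digits becomes one hyphen
    slug = re.sub(r"[^a-z0-9]+", "-", ascii_text.lower()).strip("-")
    if len(slug) > max_length:
        window = slug[:max_length + 1]
        # Trim at a hyphen so words aren't cut in half (hard cut if there is none)
        slug = window.rsplit("-", 1)[0] if "-" in window else slug[:max_length]
    return f"/{slug.strip('-')}/"


print(slugify("Blue Suede Shoes"))  # /blue-suede-shoes/
```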

Title Tags

A title tag is the HTML code that forms the clickable headline found in the search engine result pages (SERPs). The title tag is extremely important to the click-through rate of your search listing. It is the first thing that a search user will read about your page, and you have only a few seconds to capture their attention and convince them that they will find what they are seeking if they click on your listing.

The following are some quick tips for writing title tags:

- Title tags should contain keywords relevant to the contents of the web page.

- You should place important keywords near the beginning of the title.

- Try to keep titles between 50 and 60 characters in length. Google actually truncates by display width, at roughly 600 pixels, and a 60-character title fits within that limit roughly 90% of the time. Beyond 600 pixels, Google will display a truncated title, and your title might not have the impact that you intended.

- Whenever possible, use adjectives to enhance the title. Example: The Complete Guide to SEO.

- Don’t stuff your title with keywords. It will look spammy, and users will be less likely to click on your listing.

- Don’t have web pages with duplicate titles. Each page needs to accurately describe its own unique content.

- If you have room, try to incorporate your brand name. This can be a great way to generate some brand recognition. If you already have a strong brand, this may even improve the click-through rate of your search listing.
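A short script can flag the most common title problems before pages go live. This sketch checks the 50 to 60 character guideline and keyword placement from the tips above, and finds duplicate titles across pages. It counts characters, whereas Google truncates by pixel width, so treat the limits as approximate; the function names and thresholds are illustrative.

```python
from collections import Counter


def title_warnings(title, primary_keyword, min_len=50, max_len=60):
    """Flag common title-tag problems (character count is only a proxy
    for the pixel width Google actually uses)."""
    warnings = []
    if len(title) > max_len:
        warnings.append(f"{len(title)} characters: may be truncated")
    elif len(title) < min_len:
        warnings.append(f"{len(title)} characters: room for detail or a brand name")
    position = title.lower().find(primary_keyword.lower())
    if position == -1:
        warnings.append("primary keyword missing")
    elif position > len(title) // 2:
        warnings.append("primary keyword appears late in the title")
    return warnings


def duplicate_titles(titles_by_url):
    """Return the URLs whose title (ignoring case) is used on more than one page."""
    counts = Counter(t.strip().lower() for t in titles_by_url.values())
    return sorted(url for url, t in titles_by_url.items()
                  if counts[t.strip().lower()] > 1)


print(title_warnings("Blue Suede Shoes: The Complete Buying Guide | Example Co",
                     "blue suede shoes"))  # []
```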

Meta Description Tags

A meta description tag is the HTML code that describes the content of a web page to a search user. The description can be found in the search engine result pages (SERPs), underneath the title and URL of a search listing. The description, while not a ranking signal itself, plays an important role with SEO. If you provide a relevant, well-written, enticing description, the click-through rate of the search listing will most likely be higher. This can lead to a greater share of traffic and a potential improvement in search rankings, as click-through rate is a search ranking factor.

The following are some quick tips for writing description tags:

- Use keywords, but don’t be spammy with them. Keywords within a search query will be bolded in the description.

- The rule of thumb used to be to keep descriptions to no more than 155 characters. Google is now displaying, in many instances, up to 320 characters. This is great from a search listing real estate perspective, and you should try to use as many of those 320 characters as possible. When doing so, make sure you get a clear message across in the first 155 characters in case the description gets truncated for a query.

- Accurately describe the content of the web page. If people are misled, your bounce rate will be higher and will potentially harm the position of your listing.
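To check that your key message survives a cut at 155 characters, you can preview the truncation. This sketch is an approximation only: it cuts at the last whole word before a character limit and appends "...", while Google's real truncation depends on pixel width and the query. The function name and default limit are assumptions for illustration.

```python
def description_preview(description, limit=155):
    """Approximate how a description might look if cut at `limit` characters."""
    text = " ".join(description.split())  # collapse runs of whitespace
    if len(text) <= limit:
        return text
    window = text[:limit + 1]
    # Cut at the last space so no word is split; fall back to a hard cut
    cut = window.rsplit(" ", 1)[0] if " " in window else text[:limit]
    return cut.rstrip(" ,;:.") + "..."


description = (
    "Shop blue suede shoes in every style, from classic loafers to 4-inch heels. "
    "Free shipping, easy returns, and expert fitting advice for every size, plus "
    "care guides to keep your suede looking new for years."
)
print(description_preview(description))
print(description_preview(description, limit=320))  # fits, so nothing is cut
```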

Meta Keywords Tags

The meta keywords tag is HTML code that was designed to allow you to provide guidance to the search engines as to the specific keywords the web page is relevant for. In 2009, both Google and Yahoo announced that they hadn’t used the tag for quite some time, and in 2014, Bing acknowledged the same. As a best practice, most SEOs don’t use the tag at all anymore.

Header Tags

A header tag is HTML code that indicates the level of importance of each heading on a web page. There are six heading elements, H1 through H6, with H1 being the most significant and H6 the least. Typically the title of an article or the name of a category, product, or service is given the H1 tag, as it is generally the most prominent and important heading.

Headings used to play an important role in the optimization of a web page, and the search engines would take them into consideration. While they might not carry the same weight they once did, it is still widely believed they offer some ranking value and it is best to include them.

As an SEO best practice, use only one H1 tag, as it signifies the primary theme of the web page. All other headings should use H2-H6 depending on their level of importance. From an optimization standpoint, it is safe to use multiple H2-H6 tags.
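To check a page against the one-H1 guideline, you can pull out its heading outline. This sketch uses Python's standard `html.parser` module; the class and function names are illustrative, and it reads only the static HTML, so headings injected by JavaScript won't appear.

```python
from html.parser import HTMLParser


class HeadingCollector(HTMLParser):
    """Collect (level, text) for every <h1>-<h6> element, in document order."""

    def __init__(self):
        super().__init__()
        self.headings = []
        self._level = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser passes tag names in lowercase
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self._level = int(tag[1])
            self._text = []

    def handle_endtag(self, tag):
        if self._level is not None and tag == f"h{self._level}":
            self.headings.append((self._level, " ".join("".join(self._text).split())))
            self._level = None

    def handle_data(self, data):
        if self._level is not None:
            self._text.append(data)


def heading_report(html):
    """Return (number of H1 tags, list of (level, text) headings)."""
    collector = HeadingCollector()
    collector.feed(html)
    collector.close()
    h1_count = sum(1 for level, _ in collector.headings if level == 1)
    return h1_count, collector.headings


h1s, outline = heading_report("<h1>Blue Shoes</h1><h2>Suede</h2><h2>Canvas</h2>")
print(h1s, outline)  # 1 [(1, 'Blue Shoes'), (2, 'Suede'), (2, 'Canvas')]
```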

Competitor Research

Conducting a competitor analysis is a strategic function of properly optimizing a website. This can help you identify areas that your business’s website might not be covering. The analysis will help drive the keyword research and content development so that improvements can be made.

The following are some quick tips for conducting competitor research for SEO:

- Architectural Flow: Look at the flow of the content on your competitors’ websites. Analyze how they have structured their sites so that visitors and crawlers can get deeper into them. There may be some important takeaways from a website that ranks well, and they may lead you to plan out your website differently.

- Keyword Gap Analysis: Use a tool like SEMRush or Ahrefs to view the keywords that your competitors are ranking for. Compare that list to what you are ranking for and determine if there are any gaps that you need to cover.

- Backlink Analysis: Similar to a keyword gap analysis, you can also do that for backlinks. Backlinks are extremely important to the algorithms of the search engines, so you might find some easy wins by seeing where your competition has links. There are great tools such as Ahrefs and Majestic that can help you find these backlinks.
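Once you have exported keyword lists from a tool, the gap analysis itself is a set comparison. This sketch assumes the exports have already been loaded as plain lists of keywords per competitor (the domains and keywords are placeholders); it lists what competitors rank for that you don't, with keywords shared by several competitors first.

```python
def keyword_gap(our_keywords, competitor_keywords):
    """Keywords competitors rank for that we don't, most-shared first.

    our_keywords: iterable of keywords we rank for.
    competitor_keywords: dict of competitor domain -> iterable of keywords.
    """
    ours = {k.strip().lower() for k in our_keywords}
    gaps = {}
    for competitor, keywords in competitor_keywords.items():
        for keyword in keywords:
            normalized = keyword.strip().lower()
            if normalized not in ours:
                gaps.setdefault(normalized, set()).add(competitor)
    # Gaps shared by several competitors are usually worth a look first
    return sorted(gaps.items(), key=lambda item: (-len(item[1]), item[0]))


for keyword, rivals in keyword_gap(
        ["blue shoes"],
        {"rival-a.example": ["blue shoes", "blue suede shoes", "red shoes"],
         "rival-b.example": ["Blue Suede Shoes"]}):
    print(keyword, sorted(rivals))
```

The same function works for a backlink gap if you pass in referring domains instead of keywords.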

Keyword Research

Keyword research is essential to any SEO campaign, and this is one area where you don’t want to cut corners. You need to understand how people search for your specific products, services, or information. Without knowing this, it will be difficult to structure your content to give your website a chance to rank for valuable search queries.

One of the biggest mistakes that a webmaster can make is focusing solely on ranking for one or two keywords. Often, there are some highly competitive, high-volume keywords that you feel you must rank for in order to be successful. Those keywords tend to be one to two words in length, and there is a pretty good chance your competitors are working hard on those very same keywords. Those make for good longer-term goals, and you should still optimize for those, but the real value is in long-tail keywords.

Long-tail keywords make up the vast majority of all search volume. These keywords are more specific, longer phrases that someone will type in, such as “blue 4-inch high heel shoes.” This is much more descriptive than “blue shoes,” even though that phrase might have a higher search volume. Because these keywords are so much more descriptive, the people searching with them also tend to be closer to making a purchase.

See Also: 10 Free Keyword Research Tools

SEO Copywriting & Content Optimization

You have probably heard that “content is king” when it comes to SEO. The thing about content is that it drives a lot of factors related to optimization. You need quality content to have a chance at ranking for the keywords that you are targeting, but for you to have success, you also need to have content on your website that webmasters find interesting enough to want to link back to. Not every page can do both. Pages that are sales focused, such as product and service pages, aren’t usually the kind of content that webmasters want to link to. Link generation from content will most likely come from your blog or other informational resources on your website.

The following are some quick tips for content optimization:

- Understand your audience and focus on writing your content for them. Don’t force something that won’t resonate; you will risk losing sales and increasing your visitor bounce rate.

- Utilize the keyword research that you have performed. You should naturally work important keyword phrases (that you identified through your research) into your content.

- Be certain the keywords that you are optimizing for are relevant to the overall theme of the page.

- Don’t overdo it with keyword frequency. Important keywords should be in headings with a few mentions throughout the copy. Search engines are good at understanding synonyms and the relationships between words, so just focus on writing naturally. If you use the same keywords over and over, the copy won’t sound natural, and you risk over-optimizing with the search engines.

- Be thorough when writing. Content marketing statistics show that long-form content is nine times more effective than short form.

- Write headlines that will capture the visitor’s attention.

- Try to incorporate images, charts, and videos to make the content more engaging.

- Tell a story and, whenever possible, include a call to action.
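To catch the kind of repetition warned about above, you can count how often a phrase appears relative to the length of the copy. This sketch does only that. It doesn't encode any "correct" keyword density, since the search engines don't publish one, and its tokenization (lowercase letters, digits, and apostrophes) is a simplifying assumption.

```python
import re

WORD = re.compile(r"[a-z0-9']+")


def phrase_stats(text, phrase):
    """Return (occurrences, total_words, occurrences_per_100_words)."""
    words = WORD.findall(text.lower())
    target = WORD.findall(phrase.lower())
    if not words or not target:
        return 0, len(words), 0.0
    n = len(target)
    # Slide a window the length of the phrase across the copy
    hits = sum(1 for i in range(len(words) - n + 1) if words[i:i + n] == target)
    return hits, len(words), 100 * hits / len(words)


copy = "Blue shoes are great. These blue shoes fit well."
hits, total, per_100 = phrase_stats(copy, "blue shoes")
print(f"{hits} uses in {total} words ({per_100:.1f} per 100 words)")
# 2 uses in 9 words (22.2 per 100 words)
```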

See Also: SEO Writing: Creating SEO Friendly Content in 6 Easy Steps

Breadcrumbs

Breadcrumbs can play an important role in helping Google better determine the structure of your website. They often show how content is categorized within a website, and they also provide built-in internal links to the category pages within your website. When placing breadcrumbs, make sure to mark them up with structured data, as Google may also choose to include these breadcrumb links within your SERP listing.
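Breadcrumb markup uses Schema.org's `BreadcrumbList` type. Here is a minimal sketch, with placeholder page names and URLs, that builds the JSON-LD object from a trail of (name, URL) pairs, numbering each `ListItem` by its `position` in the trail.

```python
import json


def breadcrumb_jsonld(trail):
    """trail: list of (name, url) pairs from the home page down to the current page."""
    return {
        "@context": "https://schema.org",
        "@type": "BreadcrumbList",
        "itemListElement": [
            {"@type": "ListItem", "position": i, "name": name, "item": url}
            for i, (name, url) in enumerate(trail, start=1)
        ],
    }


trail = [
    ("Home", "https://www.example.com/"),
    ("Shoes", "https://www.example.com/shoes/"),
    ("Blue Shoes", "https://www.example.com/shoes/blue-shoes/"),
]
print(json.dumps(breadcrumb_jsonld(trail), indent=2))
```

The printed JSON would go inside a `<script type="application/ld+json">` tag on the page.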

See Also: Bread Crumbs & SEO: What are They and How They’re Important for SEO

Internal Linking

To understand the importance of internal link building, you need to understand the concept of “link juice.” Link juice is the authority or equity passed from one web page or website to another. So with each link, internal or external, there is a certain amount of value that is passed.

When you write content for your website, you need to take into consideration whether there are any other relevant web pages on your site that would be appropriate to link to from that content. This promotes the flow of “link juice,” but it also provides users the benefit of links to other relevant information on your website.
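The idea of "link juice" traces back to PageRank, the link-analysis algorithm described by Google's founders, Larry Page and Sergey Brin. The sketch below runs the classic simplified PageRank iteration over a hypothetical four-page site to show how internal links spread value from page to page. Google's current ranking systems are far more complex, so read the numbers as an illustration only.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Simplified PageRank over a dict of page -> list of pages it links to."""
    pages = set(links) | {t for targets in links.values() for t in targets}
    if not pages:
        return {}
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}
    for _ in range(iterations):
        # Every page gets a small baseline share...
        new_rank = {page: (1 - damping) / n for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # ...plus an equal split of the rank of each page linking to it
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A page with no outgoing links spreads its rank across all pages
                for target in pages:
                    new_rank[target] += damping * rank[page] / n
        rank = new_rank
    return rank


# Hypothetical four-page site; "orphan" links out, but nothing links to it
site = {
    "home": ["shoes", "blog"],
    "shoes": ["home"],
    "blog": ["home", "shoes"],
    "orphan": ["home"],
}
for page, score in sorted(pagerank(site).items(), key=lambda kv: -kv[1]):
    print(f"{page:7} {score:.3f}")
```

The heavily linked home page comes out on top, while the page nothing links to is left with only the baseline share, which is why orphaned pages benefit from internal links.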

Off-Page SEO

Off-Page SEO (also known as Off-Site SEO) refers to optimization strategies that are enacted outside of your website that may influence the rankings within the search engine results pages.

A large portion of Google’s algorithm is focused on how the outside world (the web) views the trustworthiness of your website. You may have the best-looking website with the most robust content, but if you don’t put in the work to get noticed, odds are good that your website won’t rank very well.

Since Google’s algorithm is non-human, it relies heavily on outside signals such as links from other websites, article/press mentions, social media mentions, and reviews to help determine the value of the information on your website.

Importance of Link Building

While it would be difficult to rank without quality content, you won’t have any chance on competitive keyword phrases without links. It is still widely accepted that the number and quality of inbound links to a website and/or web page is the number one influencer of search rankings.

With link building as the top search signal, you must have a solid strategy to attract and acquire links for your website. That being said, you should not even start this process without having a solid foundation built with content and design. Acquiring links is much easier when you have attractive, valuable resources that other websites will want to link to.

Link Quality

It is important to know that not all links are created equal. Google’s algorithm looks closely at the trustworthiness of the linking website. For example, a link from the New York Times, which has millions of links and tens of thousands of reputable and unique websites linking to it, will carry much more weight than a link from your friend’s free WordPress site he built a couple of weeks ago.

There are tools that estimate the authority of a website. Two popular tools are Moz’s Open Site Explorer, which calculates a Domain Authority score, and Ahrefs, which calculates a Domain Rating. These tools are great for determining which links are worth acquiring. The higher the score, the more worthwhile it would be to spend time acquiring that link.

The number of links isn’t the only thing that the search engines look at from a quality perspective. They will also take into consideration how relevant the linking website/content is to your own site. If you are selling shoes, for example, a link from a fashion blog will carry more weight than a link from Joe’s Pizza Shack. It may seem crazy to even try to get a link from a pizza place for a shoe website, but in the early days, search engines focused more on the quantity of links. As webmasters caught on, some would try to acquire every link they could find to influence search results. To ensure the quality of results, the search engines had to focus on quality to account for this potential spam.

The Quantity of Links for a Website/Web Page

Even though the greater focus is on the quality and relevance of links, quantity still has a place. You want to continue to grow the number of authoritative links pointing to your website and its important web pages so that you have the ability to challenge your competition on highly competitive keywords.

Another aspect of the quantity discussion is that it is better to have 100 links from 100 different websites than 100 links coming from 10 websites. There is a strong correlation between the number of unique domains linking to your website and your chances of ranking improvement.
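Counting referring domains from a backlink export is straightforward. This sketch assumes the export is a list of linking page URLs (placeholders here). It groups by hostname and ignores a leading "www."; a stricter grouping by registrable domain (so that blog.example.com and example.com count once) would need the Public Suffix List, which is left out.

```python
from urllib.parse import urlsplit


def link_summary(linking_page_urls):
    """Count total backlinks and unique referring hosts (ignoring "www.")."""
    domains = set()
    for url in linking_page_urls:
        host = (urlsplit(url).hostname or "").lower()
        if host.startswith("www."):
            host = host[len("www."):]
        if host:
            domains.add(host)
    return {"total_links": len(linking_page_urls), "referring_domains": len(domains)}


backlinks = [
    "https://www.fashionblog.example/post-1",
    "https://fashionblog.example/post-2",
    "https://news.example.org/story",
    "https://news.example.org/follow-up",
    "http://shoeforum.example.net/thread/9",
]
print(link_summary(backlinks))  # {'total_links': 5, 'referring_domains': 3}
```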

Of course, once you obtain top rankings, you can’t take a break. If you aren’t growing your link profile, you can trust that your competitors are, and they will eventually surpass you. For this reason, SEO has become a necessary ongoing marketing function for most organizations.

Link Anchor Text

Anchor text is the actual highlighted hyperlink text on a web page that directs a user to a different web page upon clicking. How websites link to you does make a difference in the search engine algorithms, and anchor text is part of that equation. The search engines look to anchor text as a signal for a web page’s relevance for specific search phrases.

Example: Getting a link with the anchor text “Blue Shoes” on a fashion blog would signal to the search engines that your web page is relevant to “blue shoes.”

In the past, the more links pointing to your web pages with a specific keyword in the anchor text, the more likely you were to rank for that keyword. There is still some truth to that, but if a high percentage of your links use keyword-rich anchor text, the search engines will suspect you are trying to manipulate the results.

A natural link profile generally contains a varied mix of anchor text, usually dominated by linking text that includes a website’s brand name.
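One rough way to check your own anchor text mix is to bucket each backlink’s anchor text and compute each bucket’s share. The sketch below is a heuristic of our own, not a method used by any search engine: the bucket names, the brand “Acme Shoes,” and the keyword list are hypothetical, and no official “safe” percentage exists.

```python
from collections import Counter


def anchor_text_mix(anchors, brand, keywords):
    """Return the share of anchors in each bucket: brand, exact_keyword, url, other.

    Heuristic buckets (assumption): any anchor containing the brand name counts
    as branded; an anchor that exactly matches a target keyword counts as
    exact-match; anchors that look like a bare URL count as "url".
    """
    brand_lower = brand.lower()
    keyword_set = {k.lower() for k in keywords}
    buckets = Counter()
    for text in anchors:
        t = text.strip().lower()
        if brand_lower in t:
            buckets["brand"] += 1
        elif t in keyword_set:
            buckets["exact_keyword"] += 1
        elif t.startswith(("http://", "https://", "www.")):
            buckets["url"] += 1
        else:
            buckets["other"] += 1
    total = sum(buckets.values()) or 1  # avoid dividing by zero on an empty list
    return {name: count / total for name, count in buckets.items()}


# Hypothetical anchors pointing at a hypothetical shoe retailer.
anchors = ["Acme Shoes", "acme shoes blog", "blue shoes",
           "https://acmeshoes.example", "click here", "Acme Shoes"]
mix = anchor_text_mix(anchors, brand="Acme Shoes", keywords=["blue shoes"])
print(mix["brand"])  # 0.5
```

In this sample, branded anchors dominate, which matches the natural pattern described above; a profile where `exact_keyword` dominated would be the kind that raises suspicion.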

Link Placement

Search engines also consider where a link is placed on the linking page. Placement affects how much weight, or “link juice,” passes from the linking website to your own web page. Footer and sidebar links are not given as much weight as they once were because websites that sold links widely abused those placements.

Links within the body content of a web page generally signal that the linked page is important to the topic being discussed, so they are given more weight. It is also widely believed that the higher a link appears within the content, the more weight and authority it passes.

Do-Follow vs. No-Follow Links

A do-follow link is simply a standard link without a nofollow attribute. It tells the search engines that the linking website is essentially vouching for the destination page and that they should follow the link and pass link juice accordingly. A no-follow link includes rel="nofollow" in its HTML code to tell the search engines not to follow the link to its destination or pass any authority or credit.
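To see the difference in actual markup, the sketch below uses Python’s standard-library `html.parser` to list every link on a page and whether its `rel` attribute contains `nofollow`. The class name `LinkAudit` and the sample HTML are hypothetical; the check treats `rel` as a space-separated list of tokens, as HTML defines it.

```python
from html.parser import HTMLParser


class LinkAudit(HTMLParser):
    """Collect (href, is_nofollow) for every <a href="..."> tag in a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        href = attributes.get("href")
        if href is None:  # skip anchors without an href value
            return
        # rel can hold several space-separated tokens, e.g. rel="nofollow noopener".
        rel_tokens = (attributes.get("rel") or "").lower().split()
        self.links.append((href, "nofollow" in rel_tokens))


# Hypothetical page fragment: one normal (do-follow) link and one no-follow link.
html = """
<p>See <a href="https://shoes.example/blue">blue shoes</a> and
<a href="https://ads.example/promo" rel="nofollow">this ad</a>.</p>
"""

audit = LinkAudit()
audit.feed(html)
for href, nofollow in audit.links:
    print(href, "nofollow" if nofollow else "followed")
# https://shoes.example/blue followed
# https://ads.example/promo nofollow
```

Note that the first link has no special attribute at all; “do-follow” is just the default behavior of an ordinary link.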

User-generated content such as comments, wikis, social bookmarks, forum posts, and even some articles has been abused by SEOs to obtain easy links for websites. Because of this, no-follow links have become a necessary part of search algorithms. Websites started adding the rel="nofollow" attribute to these links to signify that they weren’t vouching for them. This led to a significant reduction in spam on those websites, as there was no longer any value in obtaining a link there.

Do-follow links are significantly more valuable because of the link juice and authority that is passed, but no-follow links are very natural to have in a link profile and can still offer value from the referral traffic that they may provide.

Earned Links vs. Buying

Purchasing links for the purpose of building the authority of your website is against Google’s guidelines and would put your website at risk of receiving a penalty. All purchased links for advertising purposes should be given a no-follow designation to keep your website out of harm’s way.

Earned links are the links search engines actually want to give credit to. For a link to be “earned,” there has to be a clear and compelling reason for one website to link to another. Citations in articles and inclusion in a resource list are good examples, assuming there is a clear and relevant connection between your content and the linking website. Going back to the shoe website example, a link from a fashion blog, placed within an article and pointing to your page on the top shoe trends, has a clear connection. A footer link from a pizza shop to your shoe trends page would not appear natural at all. There would be no clear reason for them to link to that page, as it is not relevant to their business, audience, or content.

Most reputable SEO companies offer link building services: they create linkable assets (content worth linking to) and conduct outreach on your website’s behalf to obtain these earned links. You can also do this yourself by building well-thought-out resources and contacting websites that you believe would find them link-worthy.

Social Media and SEO

Even though many SEOs thought that social media was a ranking factor in Google’s algorithm, in 2014 Google’s Matt Cutts declared that it is not. In 2016, Gary Illyes, a Google Webmaster Trends Analyst, also confirmed that social media is not a ranking factor.

While social media might not be a direct contributor as a ranking factor, the following are a few ways social media can help improve SEO:

- Link Acquisition: If you produce great content and people are sharing it through social channels, webmasters and bloggers might see that content and decide to link back to it on their actual websites. The more your content is shared, the greater your chances of naturally generating links will be.

- Branded Searches: As your content, products, or services are discussed more frequently via social media, people will begin to seek you out on the search engines by typing in your brand name. If you offer a product or service, those search queries may contain your brand name plus the name of a product or service, like “[Brand Name] blue shoes,” for example. As Google or another search engine sees that you are relevant for a type of product or service based on actual customer queries, you may find yourself in a better position for the unbranded version of the query.

- Positive Business Signals: Many businesses have a social media presence, and an active profile shows that you are making an effort to engage your customers. The search engines look at numerous pieces of data to determine the legitimacy of a business or website, and social signals could potentially serve as one validator.

SEO Success Metrics

Every business is different, and the same can be said for their specific goals. SEO success can be measured in a variety of different ways. A plumber may want more phone calls, a retailer may want to sell more product, a magazine publisher may want to simply increase page views, and another business may just want to rank higher than their competitor.

The following are some common metrics for gauging the success of an SEO campaign:

- Leads/Sales: Most businesses are looking for a return on their investment regardless of the marketing medium, and SEO is no different. Ultimately, the best gauge of success is whether the efforts lead to an increase in sales and leads. If sales are the goal, the campaign should revolve around search phrases that attract in-market consumers rather than informational, question-based keywords. Question-based keywords may deliver traffic, but they typically have very low conversion rates; they belong in a broader brand strategy once your bases are covered with your money keywords.

- Organic Search Traffic: If your organic traffic is increasing, that is a good sign that your SEO campaign is working. It shows that you are moving up in the search rankings for your chosen keywords and that users are clicking on your search listings. If you know you are ranking well for keywords with decent search volume but aren’t receiving much traffic, you may want to improve your Title Tag to generate a higher click-through rate (CTR). Google Search Console shows the queries your pages appear for, along with the impressions, clicks, and click-through rate of your search listings.

- Keyword Rankings: Many businesses look at keyword rankings as an indicator of how a search campaign is performing. Rankings can be misleading, however, if they aren’t viewed in the right context. If you are solely focused on the performance of a few “high volume” keywords, you could feel discouraged even though, in the eyes of a more seasoned SEO expert, you may be making great progress. Highly competitive keywords take time, sometimes a significant amount of time, to reach first-page rankings. What you should look for is positive progress on those terms, to the point where you can see a path to your desired results over time.

- Keyword Diversity: It is easy to look at the high-volume, competitive keywords and feel that you need to rank for them to be successful. Those are great longer-term goals, but long-tail keywords are commonly estimated to account for around 70% of all search traffic. Tools like SEMrush and Ahrefs monitor millions of keywords and can show you how many keywords your site or web page ranks for. If that number keeps increasing, it is another good indicator that your campaign is heading in the right direction.
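The Organic Search Traffic metric above suggests looking for keywords that rank well but earn few clicks. CTR is simply clicks divided by impressions, so that check can be sketched against rows of query data like those Search Console reports. The `QueryRow` type, the sample rows, and the thresholds (top-5 average position, at least 100 impressions, a 2% CTR floor) are all hypothetical choices for illustration, not Google-recommended values.

```python
from dataclasses import dataclass


@dataclass
class QueryRow:
    query: str
    clicks: int
    impressions: int
    position: float  # average position of the listing for this query


def low_ctr_queries(rows, max_position=5.0, min_impressions=100, ctr_floor=0.02):
    """Flag well-ranked queries whose CTR (clicks / impressions) is below an assumed floor."""
    flagged = []
    for row in rows:
        # Ignore queries with too little data or rankings outside the target range.
        if row.impressions < min_impressions or row.position > max_position:
            continue
        ctr = row.clicks / row.impressions
        if ctr < ctr_floor:
            flagged.append((row.query, round(ctr, 4)))
    return flagged


# Hypothetical rows, e.g. copied from a performance report export.
rows = [
    QueryRow("blue shoes", clicks=12, impressions=1500, position=3.2),
    QueryRow("buy blue shoes", clicks=90, impressions=1200, position=2.1),
    QueryRow("shoe trends", clicks=1, impressions=40, position=4.0),
]
print(low_ctr_queries(rows))  # [('blue shoes', 0.008)]
```

Here “blue shoes” ranks around position 3 but converts only 0.8% of impressions into clicks, making it a candidate for a rewritten Title Tag; “shoe trends” is skipped because 40 impressions is too little data to judge.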

See Also: Best Tools to Monitor Keyword Search Rankings

Creating a Winning SEO Strategy

Most businesses today know the basic concepts of SEO. You can perform many individual SEO tasks well, but success comes from how you put the pieces together into a cohesive strategy. That strategy creates the foundation for organic traffic growth that can propel a business for years to come.

You need to be prepared to spend a significant amount of time mapping out the strategy because it is much better and more effective to do it right the first time. The following are some of the key components found in nearly every successful SEO strategy:

- Identify Your Goals: For a successful campaign, you first need to have a clear idea of what you are trying to accomplish. That will drive the strategy moving forward, as everything that you do will be to achieve that goal. For example, if your goal is to generate leads or sales, the structure of your website and the content written should be focused on sending customers into your sales funnel.

- Focus on Topics: Topics should dictate the design and flow of the website and its content. Knowing your products and services, make a list of high-level topics that will form the content pillars of your website. Typically, these topics are going to be the shorter-tail, higher-volume, and more competitive keywords.

- Do the Research: After you settle on the topics that properly describe your products and services, research the longer-tail keywords that customers search for when looking for information about those topics. These keywords often reflect a wide range of specific customer questions.

- Create the Content: When creating the pillar content, be as thorough as possible; search engines have trended toward placing a higher value on long-form content. From there, create supportive content based on the long-tail research you performed for each topic. The pillar content should link to the supportive content, and the supportive content should link back to the pillar. This helps establish a semantic relationship between the pages that search engines reward.

- Promote: It isn’t enough to create great content. Most businesses don’t have a large audience that follows their blog closely, so if only a few people are reading the content, you won’t earn many links to it without some form of outreach. Link building is essential to a successful SEO campaign. If you have a piece of content that is a valuable resource, contact like-minded websites that you feel would find that resource valuable as well. Explain why you believe their readers or customers will see value in it, and they may decide to link back to it. Another way to promote is through social media. The more people who share your content, the more likely someone will link to it from their website. If you don’t have a large following, you may want to spend a little on social media advertising to boost your social presence.

- Make the Time: The hardest thing to do is make time. SEO is not a “set it and forget it” marketing activity. You need to dedicate time each week to achieving your goals. Once you achieve them, make new goals. If you don’t put in the effort, you will find your competition passing you by. If you don’t have the time, you should consider hiring a company that offers SEO services.
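The pillar-and-supportive-content linking described under Create the Content can be audited mechanically once you have a map of each page’s internal links (for example, from a site crawl). The sketch below assumes such a map is already available as a dictionary; the function name `missing_cluster_links` and the example URLs are hypothetical.

```python
def missing_cluster_links(links, pillar, supporting):
    """Return (from_page, to_page) pairs missing from a pillar/supporting link structure.

    `links` maps each page path to the set of internal paths it links to
    (assumed to come from a crawl of your own site).
    """
    missing = []
    for page in supporting:
        if page not in links.get(pillar, set()):
            missing.append((pillar, page))   # pillar should link down to the supporting page
        if pillar not in links.get(page, set()):
            missing.append((page, pillar))   # supporting page should link back up to the pillar
    return missing


# Hypothetical internal-link map for a shoe site.
site_links = {
    "/shoes": {"/shoes/blue", "/shoes/running"},
    "/shoes/blue": {"/shoes"},
    "/shoes/running": set(),
    "/shoes/care": {"/shoes"},
}
print(missing_cluster_links(site_links, "/shoes",
                            ["/shoes/blue", "/shoes/running", "/shoes/care"]))
# [('/shoes/running', '/shoes'), ('/shoes', '/shoes/care')]
```

The output lists exactly the two links that would complete the two-way structure: the running-shoes page never links back to the pillar, and the pillar never links to the shoe-care page.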

See Also: Ecommerce SEO Best Practices Guide

See Also: Beginner’s Guide to Amazon SEO

See Also: Local SEO for Multi-Location Businesses

When Should I Expect to See Results?

A standard rule of thumb is that you should start to see results within 3 to 6 months of starting an SEO campaign. That does not, however, mean that you will be at the top for all of your keywords.

The following are several factors that contribute to how quickly you will see success with your SEO campaign:

- Your Initial Starting Point: Every website can achieve results, but you need to set reasonable expectations. Every website is unique. Each site will have different content, architecture, and link profiles. A website that was created a few months ago will react very differently compared to one that has years of history behind it.

- Keyword Competition: Not all keywords are created equal. Typically, higher-volume keywords also have the highest level of competition. If you aren’t currently ranking on the first three pages for those competitive head terms, you should expect it to take a fair amount of time (sometimes longer than a year) to really make headway. Longer-tail keywords tend to have lower levels of competition but, by some estimates, provide upwards of 70% of all organic search traffic. Depending on how established your website and brand are, most websites will reap the biggest benefits from long-tail keywords early on.

- SEO Strategy: Forming a solid SEO strategy is key to how your website will perform in the search results. The strategy sets the direction for all campaign activities. If executed properly, you should begin to see SEO success building throughout the campaign.

- Campaign Investment: The old saying “you get out of it what you put into it” definitely applies to SEO, in terms of both time and money. If you are not dedicating time to search engine optimization activities, you aren’t going to see results. The same is true if you hire an SEO company to manage the campaign: if you are only looking for a cheap option, the provider won’t be able to dedicate much time and effort to delivering the results you want. That being said, some investment is better than no investment; you will just have to adjust your expectations accordingly.

Search Engine Algorithm Updates

Algorithm updates are changes to how ranking signals are evaluated and applied to the search index to deliver relevant results for search queries. Google makes roughly 500 algorithm updates per year. Of those, typically only a few are considered significant, while the vast majority are minor adjustments. Until recently, major updates were given names, either by Google or by the SEO community. Now, major updates are usually rolled into the core algorithm, making it more difficult to determine the true nature of the changes.

The following are some of the more well-known algorithm updates over the years:

- Panda: Presenting high-quality content to search users has long been a focus for Google. The Google Panda update was created to reduce the number of low-quality, thin-content pages displayed in their results while rewarding higher quality pages that offer unique, compelling content.

- Penguin: The number of links pointing to a website plays a very significant role in how well the site performs in the SERPs (search engine results pages). Because it is such an important ranking factor, link volume was an easy target for abuse by unethical SEOs. Google released Penguin to combat link spam. The purpose of the update is to filter out spammy, manipulative links while rewarding links that are natural and relevant.

- Hummingbird: The Google Hummingbird update was a major overhaul of the core algorithm and how Google responded to user queries. This update was focused on providing relevant results based on identifying the user’s intent using Google’s Knowledge Graph and semantic search.

- Pigeon: The Google Pigeon update focused on improving localized search. Google strengthened the ranking factors related to a business’s proximity to the searcher to improve results for queries with local intent. For example, if a search user types in “plumber,” the intent is to see plumbers in their particular area, not a list of companies around the country.

- RankBrain: RankBrain is a machine-learning component of Google’s algorithm that interprets search queries and delivers results that best match the user’s intent, even when those results don’t contain the exact query that was searched. Google continues to use this machine learning to refine its results as the algorithm learns more about the intent behind queries.

- E-A-T/Medic Update: In August 2018, Google rolled out a core algorithm update that was initially dubbed the “Medic Update” but is also commonly referred to as the E-A-T update, because its primary component focuses on expertise, authoritativeness, and trustworthiness. Health and other YMYL (Your Money or Your Life) content that wasn’t clearly written by a reputable author (a doctor, financial advisor, etc.), and sites with little or a negative online reputation, seemed to be impacted the most.

Search Engine Penalties

There are two varieties of search engine penalties that a website can receive: manual or algorithmic.

Manual penalties (which Google calls manual actions) result from a Google employee reviewing a website and finding optimization tactics that fall outside of Google’s Webmaster Guidelines. With a manual penalty, the business will receive a notice within Google Search Console.

Algorithmic penalties result from Google’s algorithm detecting tactics that it believes violate Google’s Webmaster Guidelines. Typically, no notice is provided for these penalties. The most common way for a webmaster to identify a problem is to match the timing of traffic or ranking losses with known Google algorithm updates. As Google has integrated key updates (such as Penguin) into its core algorithm, it has become much more difficult to pinpoint the exact nature of an update. In those instances, webmasters rely heavily on chatter within the SEO community to see whether the affected sites share common traits.

The two most common penalties revolve around two of the more important aspects of Google’s algorithm: content and links.

With Google Panda, sites with very thin or duplicate content see significant drops in rankings, traffic, and indexed pages.

Websites that have a spammy link profile can receive an “unnatural links” penalty in Google Search Console (formerly Webmaster Tools) or be caught by Google’s Penguin algorithm. Sites hit by either have an extremely difficult time ranking well for keywords until they begin the penalty recovery process and the offending links have been removed or disavowed.

White Hat vs. Black Hat

There are two schools of thought when it comes to SEO. One has to do with long-term growth with long-term rewards (White Hat), and the other is to grow as fast as possible while reaping the rewards until you are finally caught breaking the rules (Black Hat).

Ethical SEOs employ what are considered White Hat SEO tactics. These SEOs focus on creating positive experiences with quality content while building natural links to the website. These tactics stand the test of time and usually see more positive gains than negative ones when a Google algorithm change occurs. They build a solid foundation for sustainable growth in organic traffic.

Black Hat SEOs don’t mind violating Google’s guidelines. They are typically focused on improving search rankings quickly to profit from the organic traffic increase while it lasts. They use tactics such as purchasing links, creating doorway pages, cloaking, and using spun content. Google eventually detects these tactics and penalizes the websites accordingly, and once that happens, Black Hat SEOs usually move on to their next short-term conquest. These are not tactics a legitimate business would want to use, as they can be very detrimental to the future of your online presence and reputation.

What is the Future of Search Engine Optimization?

The future of SEO will increasingly revolve around voice search. This is the next frontier that the search engines are working through, and SEOs will need to be ready.

When it comes to nationally competitive keywords, obtaining the featured snippet or top ranking will be very important. You will need to make sure you have content that answers the questions of search users.

For Local SEO, maintaining consistent name, address, and phone (NAP) data across online directories will be important, but you will also need to earn reviews to stand out from the crowd.

See Also: How Popular is Voice Search?

SEO Consultants

If your company has made the determination that you will need to hire a digital marketing agency, either for a lack of available time or expertise internally, the evaluation process is very important.

The following are some key areas that you will want to evaluate when choosing an SEO firm:

- Consultation: You don’t want an agency that runs its business on autopilot. If they aren’t actively trying to learn about your business or goals, eliminate them from consideration. Once you reach the presentation stage, don’t accept a proposal that is simply emailed over, either. Be engaged, and listen to what is being proposed and any SEO advice that is offered.

- Plan of Action: When selecting any vendor, you need to believe in what they are going to do for your company. As part of the proposal process, the prospective SEO provider should provide you with a detailed plan of action. This plan should encompass all areas of SEO including on-page, off-page, and how success will be measured. A timeline of SEO activities is also something that most reputable firms will provide.

- Quality over Quantity: Some SEO vendors will promise a very large number of links and other deliverables each month. That may be realistic if you are paying several thousands of dollars monthly, but if it sounds too good to be true, it probably is. Quality work takes time and usually doesn’t come in large quantities.

- Case Studies: Some people say that you should get references, but odds are pretty good that the provider has cherry-picked a list of clients that will have nothing but glowing remarks. Case studies offer much greater value as you can read about real clients, their problems, and how the prospective company produced results for them.

- Expertise: When it comes to SEO, it is usually better to choose a company that offers it as their primary service. Search optimization is continuously evolving and it is better to be with a company that is in the trenches every day, aware of the latest SEO trends and research. You will want to partner with a company that is plugged in. Also, ask about their recognitions and involvement within the SEO community.

- Success Metrics: Work with a company that talks about metrics other than just rankings. If they aren’t talking about generating sales and leads, they probably aren’t the right fit. Many providers can get you ranking for keywords, but keywords that lead to conversions are where it matters most.

- Cost: Real search engine optimization takes time and effort. If you are being presented with $199 per month options, you are probably looking in the wrong place for vendors. You don’t have to mortgage everything to purchase SEO services, but you need to have enough of a budget to have actual work performed.

SEO Careers

Search engine optimization is an important skill in the fast-growing digital marketing industry, which, according to Forrester Research, will grow to $120 billion in marketing spend by 2021.

If you are considering a career as an SEO specialist, the following are some traits that would serve you well in this field:

- Enjoy Changing Environments: Even though the core principles of SEO haven’t changed much over the years, algorithms do get updated. Aside from algorithms, each client presents their own unique challenges.

- Love to Research: Half of the fun of being an SEO professional is the research. Test-and-learn environments can present exciting new discoveries.

- Great Organizational Skills: To be successful, you need to be able to formulate strategies and execute them.

- The Passion to Win: If you like the feeling that you get when you win, just imagine ranking number one for a highly competitive keyword!

See Also: SEO Certifications: Are They Beneficial & Do They Make you an Expert?

See Also: SEO Courses: The Best Free and Paid Training Options

See Also: Digital Marketing Career Guide: How to Get Started

SEO Tools

As in almost any other profession, you need to be equipped with the right tools in order to succeed. The following is a list of popular search engine optimization tools used by industry professionals.

- Moz SEO Products: Moz is one of the best-known SEO product providers. Moz Pro has tools for keyword research, link building, website auditing, and more. They also have a product called Moz Local that helps with local listing management.

- Ahrefs: Ahrefs offers a wide range of tools such as a keyword explorer, a content explorer, rank tracking, and a website auditor. Their most popular feature is their backlink analysis tool, which rates how powerful each domain and specific URL is based on the quality of the links pointing to it. They frequently release new features.

- SEMrush: SEMrush offers organic and paid traffic analytics. You can type in any domain or web page and see what keywords are ranking. They also offer a variety of other features such as a site auditor and a content topic research tool. This is another service known for frequent product updates and new features that has become an invaluable resource for many SEOs.

- Majestic: Majestic offers a tool that allows you to analyze the backlink profile of any website or web page. They have crawled and indexed millions of URLs, categorizing each page based on topic. They have also assigned Trust Flow and Citation Flow ratings to each URL to represent, based on their analysis, how trustworthy and influential a web page or domain may be.

- Screaming Frog: Screaming Frog offers a popular desktop application that crawls websites to detect potential technical and on-page issues. The software is available for Windows, Mac, and Linux.

- DeepCrawl: DeepCrawl is a website spidering tool that will analyze the architecture, content, and backlinks of a website. You can set the tool to monitor your website on a regular basis so that you are aware of issues as they arise.

- LinkResearchTools: Well-known for their backlink auditing tool, LinkResearchTools has helped many SEOs identify high-risk links within a backlink profile. They also offer tools to identify link building outreach targets.

- BuzzSumo: This platform gives you the ability to analyze content for any topic or competitor. You can identify content that has been performing at a high level by inbound links and social mentions. BuzzSumo also allows you to find key influencers that you can reach out to for promoting your own content.

- Google Analytics: By far the most popular web analytics tool, Google Analytics lets you analyze data from multiple channels in one location to gain a deeper understanding of user experience.

- Google Search Console: This is an essential tool that helps a webmaster understand what Google is seeing in regard to their website. You can see indexing status, crawl errors, and even the keywords you are ranking for. Google will also send you a message there if your website has received a manual penalty.

- Bing Webmaster Tools: Bing’s equivalent of Google Search Console, Bing Webmaster Tools lets you run a variety of diagnostic and performance reports and receive alerts and notifications about your website’s performance.

- Google PageSpeed Insights: PageSpeed Insights provides real-world data on the performance of a web page across desktop and mobile devices. A speed score is provided, along with optimization tips, to improve performance. You can lose website visitors if pages take too long to load, making this tool very useful.

- Structured Data Testing Tool: This tool allows you to paste code or fetch a URL to verify proper coding of the structured data.

- Mobile-Friendly Testing Tool: This tool provides an easy test to check if a website is mobile friendly. If there are page loading or other technical issues, it will provide additional details.

Helpful Resources

Knowledge is power with SEO, as it is with most industries. You need to stay aware of the latest news, research, and trends so that you are able to adapt accordingly. The following are some educational resources that provide a wealth of knowledge on the subject of search engine optimization:

- HigherVisibility’s Insite Blog: The Insite blog is a great resource for business owners and marketers to learn more about various aspects of digital marketing. There is a heavy focus on search engine optimization, but paid search and social media are also covered well.

- HigherVisibility’s Resource Center: The resource center provides a collection of digital marketing best practices, research studies, and guides.

- The Moz Blog: The Moz Blog provides insights from some of the search industry’s greatest minds. The blog provides advice, research, and how-tos.

- Search Engine Land: One of the industry’s most popular blogs, Search Engine Land provides breaking news, research, white papers, and webinars on almost anything related to digital marketing.

- Search Engine Watch: Search Engine Watch is a top industry blog covering topics such as search engine optimization, PPC, social media, and development.

- Search Engine Roundtable: Search Engine Roundtable was founded by industry veteran Barry Schwartz, covering breaking news and recapping events in the world of search.

- Search Engine Journal: SEJ is a popular blog founded by Loren Baker that provides the latest news in the search marketing industry.

- Google Webmaster Central Blog: This blog provides official news on Google’s search index straight from the Google Webmaster Central team.

SEO Conferences & Events

If you want to learn from the greatest minds in the industry, attending conferences and events is an excellent way to do it. The following are some of the most popular conferences that have a strong focus on search marketing:

- MozCon: MozCon is a conference that provides tactical sessions focused on SEO and other topics related to digital marketing. There is always a quality lineup of top industry veterans leading the discussions.

- SMX (Search Marketing Expo): SMX is produced by Third Door Media, the company behind Search Engine Land and Marketing Land. This is one of the leading events for search marketing professionals, offering a wealth of knowledge with various sessions and training workshops.

- SearchLove Conference: Bringing together some of digital marketing’s leading minds, the SearchLove Conference helps to get marketers up to date on the latest trends in online marketing.

- PubCon: PubCon is a leading internet marketing conference and tradeshow that provides cutting-edge, advanced educational content to marketers.

The post What is SEO? The Beginner’s Guide to Search Engine Optimization appeared first on HigherVisibility.


- Millennial buyers want better content from B2B marketers: Better B2B content is a top concern among Millennial buyers, as the demographic accounts for some 33 percent of overall B2B buyers, a portion Forrester's newly-released report expects to grow to 44 percent by 2025. (Digital Commerce 360)

- LinkedIn Launches New 'Featured' Section on Profiles to Highlight Key Achievements and Links: LinkedIn (client) began a gradual roll-out of a new "Featured" section, where users' key achievements will appear near the top of profiles when starred from updates, the Microsoft-owned platform recently announced. (Social Media Today)

- How the Fastest-Growing US Companies Are Using Social Media: 87 percent of Inc. 500 firms used LinkedIn for social media during 2019, topping a list of how the fastest-growing U.S. firms are using social media, outlined in a recently-released UMass Dartmouth report of interest to digital marketers. (MarketingProfs)

- The Best Times to Post on Social Media According to Research [Infographic]: B2B businesses find that the best posting times on LinkedIn are before noon and around 6:00 p.m., one of numerous statistics on the most effective posting times for each social media platform outlined in a recently-released infographic. (Social Media Today)

- Gen Z Craves Multifaceted Content, Audio - And Even Likes (Relevant) Long-Form Ads: Digital media consumption habits vary by generation, with members of the Gen Z demographic more often seeking out multifaceted content composed of interactive elements such as polls and quizzes, according to recently-released content consumption preference data. (MediaPost)

- Are Brands Getting Smarter About Social? New Data Reveals Surprising Trends Across Platforms: In 2019, U.S. brands received an average of five percent more social engagement than in 2018, with video engagement achieving an even higher eight percent growth rate. These are two of numerous statistics of interest to online marketers contained in recent social media activity study data. (Forbes)

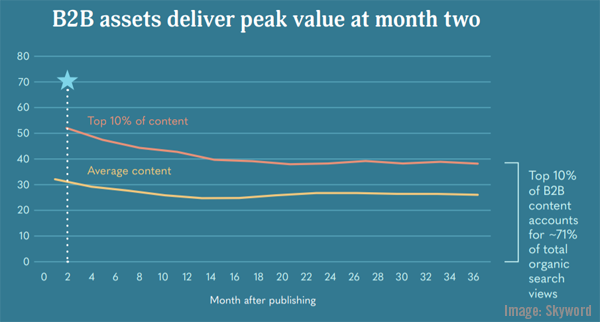

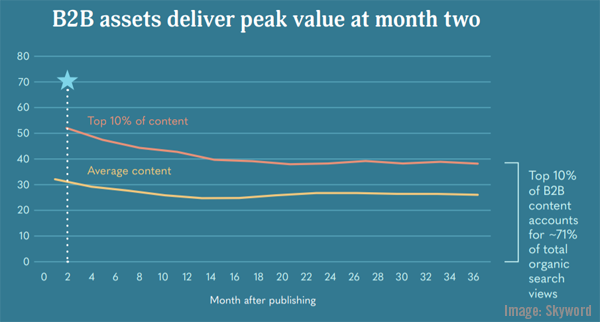

- How Businesses Handle Customer Reviews [Infographic]: Over 35 percent of businesses often or always use positive reviews in their marketing efforts, with Google, Facebook, and Yelp being the three platforms most often monitored for online reviews, according to recently-released survey data focusing on how reviews are used by businesses. (Social Media Today)

- IAB: Programmatic Now 85% Of All U.S. Digital Advertising: By 2021, programmatic advertising spending will exceed $91 billion in the U.S. alone and account for 86 percent of overall digital ad spend. These are two of several items of interest to digital marketers in newly-released Interactive Advertising Bureau report data. (MediaPost)

- Facebook Tests New Format for Separate Facebook Stories Discovery Page: Facebook has continued to ramp up its support for content shared in the Stories format, recently announcing that certain Stories will receive larger images in a test of a distinct new Facebook Stories discovery page. (Social Media Today)

- From Consistent Publishing to Performance Peaks: What You Need to Know About the Life Span of Content: Digital B2B content assets often reach peak value two months after publishing and then continue to perform steadily among consumers. These are two of many findings of interest to digital marketers contained in new B2B content lifespan report data. (Skyword)

ON THE LIGHTER SIDE:

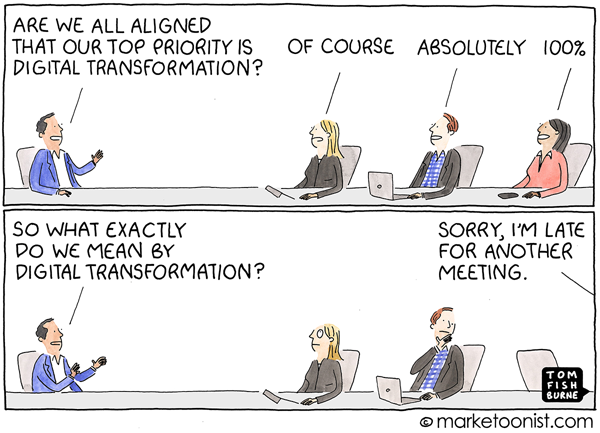

- A lighthearted look at "What Is Digital Transformation?" by Marketoonist Tom Fishburne (Marketoonist)

- Jif Partnered With Giphy to Make a Limited-Edition Peanut Butter No One Can Pronounce (Adweek)

TOPRANK MARKETING & CLIENTS IN THE NEWS:
