When developing a link building strategy – or any other type of SEO strategy, for that matter – you may find the sheer amount of data available to us at relatively low cost quite staggering.

However, that abundance can create a problem of its own: selecting the best data, and then using it in a way that makes it useful and actionable, can be a lot more difficult than collecting it.

Getting your link building strategy right is essential: links are among Google’s top two ranking signals.

This may seem obvious, but to build a link strategy that benefits from this major ranking signal and keeps being used on an ongoing basis, you need to do more than report metrics on your competitors. You should be surfacing link building and PR opportunities that show which sites you most need to acquire links from.

To us at Zazzle Media, the best way to do this is by performing a large-scale competitor link intersect.

Rather than just showing you how to implement a large-scale link intersect, which has been done many times before, I’m going to explain a smarter way of doing one by mimicking well-known search algorithms.

Doing your link intersect this way should return more relevant results. Below are the two algorithms we will be trying to replicate, each with a short description.

Topic-sensitive PageRank

Topic-sensitive PageRank is an evolved version of the PageRank algorithm that passes an additional signal on top of the traditional authority and trust scores: topical relevance.

The basis of this algorithm is that seed pages are grouped by the topic to which they belong. For example, the sports section of the BBC website would be categorised as being about sport, the politics section about politics, and so on.

All external links from those parts of the site would then pass sport- or politics-related topical PageRank to the linked site. This topical score would then flow around the web via external links, just as the traditional PageRank algorithm passes authority.

You can read more about topic-sensitive PageRank in this paper by Taher Haveliwala, who went on to become a Google software engineer not long after writing it. Matt Cutts has also mentioned Topical PageRank in a video.
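To make the idea concrete, here is a minimal Python sketch of topic-sensitive PageRank, assuming a toy link graph held in a dictionary. It is ordinary PageRank computed by power iteration, except that the random "jump" only lands on the seed pages for one topic, so pages reachable from those seeds pick up that topic’s score. The page names, the damping factor of 0.85 and the fixed 50 iterations are illustrative assumptions, not values taken from Haveliwala’s paper or from Google.

```python
def topic_sensitive_pagerank(links, topic_seeds, damping=0.85, iterations=50):
    """PageRank by power iteration, with random jumps restricted to topic seeds.

    links: dict mapping each page to the list of pages it links out to.
    topic_seeds: pages hand-classified as belonging to one topic.
    """
    pages = set(links)
    for targets in links.values():
        pages.update(targets)
    # Teleport vector: uniform over the topic's seed pages, zero elsewhere.
    teleport = {p: (1.0 / len(topic_seeds) if p in topic_seeds else 0.0) for p in pages}
    rank = dict(teleport)
    for _ in range(iterations):
        new_rank = {p: (1 - damping) * teleport[p] for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # Split this page's topical score evenly across its outbound links.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # Dangling page (no outbound links): send its score back to the seeds.
                for p in pages:
                    new_rank[p] += damping * rank[page] * teleport[p]
        rank = new_rank
    return rank


# Hypothetical toy web: two BBC sections acting as seed pages for different topics.
web = {
    "bbc.co.uk/sport": ["football-blog.example", "tennis-club.example"],
    "bbc.co.uk/politics": ["parliament-news.example"],
    "football-blog.example": ["tennis-club.example"],
    "tennis-club.example": [],
    "parliament-news.example": [],
}
sport_scores = topic_sensitive_pagerank(web, topic_seeds={"bbc.co.uk/sport"})
for page, score in sorted(sport_scores.items(), key=lambda kv: -kv[1]):
    print(f"{page}: {score:.3f}")
```

Because the politics pages cannot be reached from the sport seed, they score zero for sport; running the same function with the politics section as the seed would give a separate politics score.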

Hub and Authority Pages

The idea that the web is full of hub (or expert) pages and authority pages has been around for quite a while, and Google is likely to use some form of this algorithm.

The topic is covered in Krishna Bharat’s paper on the Hilltop algorithm and in ‘Authoritative Sources in a Hyperlinked Environment’ by Jon M. Kleinberg.

In the first paper by Krishna Bharat, an expert page is defined as a ‘page that is about a certain topic and has links to many non-affiliated pages on that topic’.

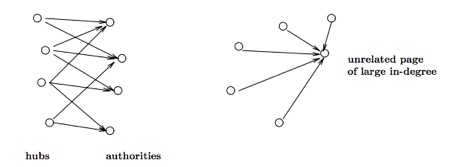

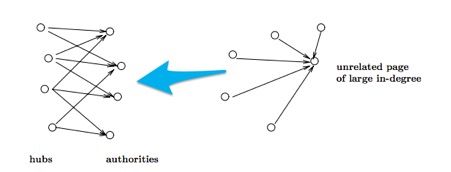

A page is defined as an authority if ‘some of the best experts on the query topic point to it’. The diagram from the Kleinberg paper shows hubs and authorities, as well as unrelated pages linking to a site.

We will be replicating the above diagram with backlink data later on!

From this paper we can gather that to become an authority and rank well for a particular term or topic, we should be looking for links from these expert/hub pages.

We need these links because hub sites are what decide who is an authority and should rank well for a given term. Rather than replicating the above algorithm at the page level, we will apply it at the domain level, simply because hub domains are more likely to contain hub pages.
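Kleinberg’s paper computes hub and authority scores with an iterative algorithm (HITS). Below is a minimal Python sketch of that iteration, run at the domain level as described above. The domain names are hypothetical, and the fixed iteration count with Euclidean normalisation is one common way to implement it, not a claim about how Google uses the idea.

```python
import math


def hits(links, iterations=50):
    """Hub and authority scores (Kleinberg's HITS) for a directed link graph.

    links: dict mapping each linking domain to the domains it links to.
    """
    nodes = set(links)
    for targets in links.values():
        nodes.update(targets)
    hub = {n: 1.0 for n in nodes}
    auth = {n: 1.0 for n in nodes}
    for _ in range(iterations):
        # A good authority is linked to by good hubs.
        auth = {n: 0.0 for n in nodes}
        for source, targets in links.items():
            for target in targets:
                auth[target] += hub[source]
        # A good hub links to good authorities.
        hub = {n: sum(auth[t] for t in links.get(n, [])) for n in nodes}
        # Normalise both vectors so the scores stay bounded.
        for scores in (auth, hub):
            norm = math.sqrt(sum(v * v for v in scores.values())) or 1.0
            for n in scores:
                scores[n] /= norm
    return hub, auth


# Hypothetical referring-domain data for three competing bridal shops.
links = {
    "weddingblog.example": ["bridal-shop-a.example", "bridal-shop-b.example", "bridal-shop-c.example"],
    "bridalmag.example": ["bridal-shop-a.example", "bridal-shop-b.example"],
    "random-forum.example": ["bridal-shop-c.example"],
}
hub, auth = hits(links)
print(max(hub, key=hub.get))
```

The domain that links to all three shops ends up as the strongest hub, while the forum that links to a single shop behaves like Kleinberg’s "unrelated page" and gets a low hub score.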

Relevancy as a Link Signal

You have probably noticed that both of the above algorithms aim to do something very similar: pass authority according to the relevance of the source page.

You will find similar aims in other link-based search algorithms, including phrase-based indexing. That algorithm is slightly beyond the scope of this post, but if we get links from the hub sites we should also be ticking the box for phrase-based indexing.

If anything, reading about these algorithms should encourage you to build relationships with topically relevant authority sites to improve rankings. Read on to find out exactly how to find these sites.

1 – Picking your target topic/keywords

Before we find the hub/expert pages we are aiming to get links from, we first need to determine which pages are our authorities.

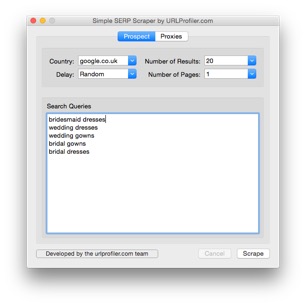

This is easy to do, as Google’s search results show which sites it deems authorities on a topic. We just need to scrape the search results for the top sites ranking for the related keywords you want to improve rankings for. In this example we have chosen the following keywords:

- bridesmaid dresses

- wedding dresses

- wedding gowns

- bridal gowns

- bridal dresses

For scraping search results we use our own in-house tool, but the Simple SERP Scraper by the creators of URL Profiler will also work. I recommend scraping the top 20 results for each term.

2 – Finding your authorities

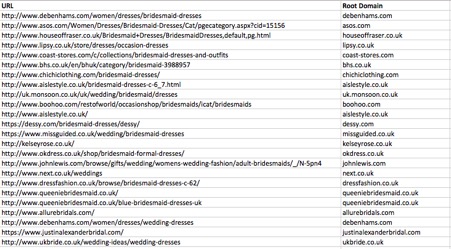

You should now have a list of URLs ranking for our target terms in Excel. Delete the other columns so that column A contains just the ranking URL. Now, in column B, add a header called ‘Root Domain’. In cell B2 add the following formula:

=IF(ISERROR(FIND("//www.",A2)), MID(A2,FIND(":",A2,4)+3,FIND("/",A2,9)-FIND(":",A2,4)-3), MID(A2,FIND(":",A2,4)+7,FIND("/",A2,9)-FIND(":",A2,4)-7))

Drag the formula down so that you have the root domain for every URL. Your spreadsheet should now look like this:
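If you would rather script this step than use the spreadsheet formula, the sketch below does the same job in Python with the standard library’s `urllib.parse`. Like the formula, it only strips a leading ‘www.’, so subdomains such as ‘blog.’ are kept; unlike the formula, it also copes with URLs that have no slash after the domain, which make `FIND("/",A2,9)` return an error. The function name `root_domain` is our own.

```python
from urllib.parse import urlparse


def root_domain(url):
    """Return the URL's host name, lower-cased, with a leading 'www.' removed."""
    host = urlparse(url).hostname or ""  # .hostname is already lower-cased
    return host[len("www."):] if host.startswith("www.") else host


print(root_domain("https://www.example.com/wedding-dresses/"))
```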

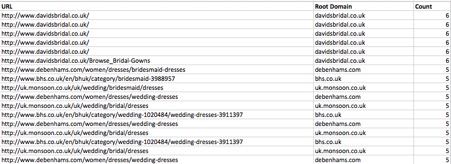

Next add another heading in column C called ‘Count’ and in C2 add and drag down the following formula:

=COUNTIF(B:B,B2)

This counts how many times each domain shows up across the keywords we scraped. Next, copy column C and paste it as values to remove the formula, then sort the table by column C from largest to smallest. Your spreadsheet should now look like this:

Now we just need to remove the duplicate domains. Go to the ‘Data’ tab in the Excel ribbon, select ‘Remove Duplicates’ and remove duplicates on column B. We can also delete column A so that we are left with just our root domains and the number of times each domain appears in the search results.
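The COUNTIF, paste-as-values, sort and remove-duplicates steps collapse into a few lines of Python with `collections.Counter`. This is a sketch that assumes you already have one root domain per ranking URL (for example, the values in the ‘Root Domain’ column); the domain names below are made up.

```python
from collections import Counter


def authority_domains(ranking_domains):
    """Count how often each root domain ranks and return unique
    (domain, count) pairs sorted from most to least frequent."""
    return Counter(ranking_domains).most_common()


# Hypothetical root domains scraped from the top results for several keywords.
domains = [
    "example-bridal.com", "example-gowns.com", "example-bridal.com",
    "example-dresses.com", "example-bridal.com", "example-gowns.com",
]
for domain, count in authority_domains(domains):
    print(domain, count)
```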

We now have the domains that Google believes to be an authority on the wedding dresses topic.

3 – Export referring domains for authority sites

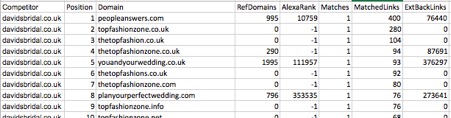

We usually use Majestic to get the referring domains for the authority sites, mainly because it has an extensive database of links. You also get its metrics, such as Citation Flow, Trust Flow and Topical Trust Flow (more on Topical Trust Flow later).

If you want to use Ahrefs or another service, you could use one of their metrics that is similar to Trust Flow. You will, however, miss out on Topical Trust Flow, which we make use of later on.

Pick the top domains with a high count from the spreadsheet we have just created. Then enter them into Majestic and export the referring domains. Once we have exported the first domain, we need to insert an empty column in column A. Give this column a header called ‘Competitor’ and then input the root domain in A2 and drag down.

Repeat this process and move onto the next competitor, except this time copy and paste the new export into the first sheet we exported (excluding the headers) so we have all the backlink data in one sheet.

I recommend repeating this until you have at least ten competitors in the sheet.
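If you prefer to combine the exports in code, the sketch below does the equivalent of adding a ‘Competitor’ column to each export and stacking the rows into one sheet, using Python’s `csv` module. The header names in the sample data (‘Domain’, ‘TrustFlow’) are assumptions for illustration; check them against the headers in your own Majestic export.

```python
import csv
import io


def combine_exports(exports):
    """Stack several referring-domain CSV exports into one list of rows.

    exports: dict mapping each competitor's root domain to the CSV text
    of that competitor's referring-domains export.
    """
    combined = []
    for competitor, csv_text in exports.items():
        for row in csv.DictReader(io.StringIO(csv_text)):
            # Same as inserting a 'Competitor' column A and filling it down.
            combined.append({"Competitor": competitor, **row})
    return combined


# Hypothetical, heavily trimmed exports for two competitors.
exports = {
    "example-bridal.com": "Domain,TrustFlow\nweddingblog.example,35\nforum.example,4\n",
    "example-gowns.com": "Domain,TrustFlow\nweddingblog.example,35\n",
}
rows = combine_exports(exports)
print(len(rows))
```

To work from files on disk instead, you could build the `exports` dictionary by reading each export with `open(path, newline="", encoding="utf-8").read()`.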

4 – Tidy up the spreadsheet

Now that we have all the referring domains we need, we can clean up our spreadsheet and remove unnecessary columns.

I usually delete all columns except the competitor, domain, TrustFlow, CitationFlow, Topical Trust Flow Topic 0 and Topical Trust Flow Value 0 columns.

I also rename the domain header to be ‘URL’, and tidy up the Topical Trust Flow headers.


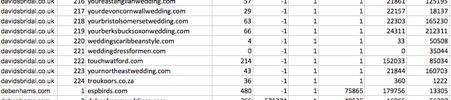

5 – Count repeat domains and mark already acquired links

Now that we have the data, we need to use the same formula as earlier to highlight the hub/expert domains that are linking to multiple topically relevant domains.

Add a header called ‘Times Linked to Competitors’ in column G and add this formula in G2:

=COUNTIF(B:B,B2)

This tells you how many competitors the site in column B links to. You will also want to mark domains that already link to your site, so that you are not building links on the same domain multiple times.
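The same ‘Times Linked to Competitors’ count can be sketched in Python. It assumes each row is a dictionary with the ‘Competitor’ and ‘URL’ headers from step 4, and it counts distinct competitors per domain; that matches `=COUNTIF(B:B,B2)` as long as each competitor’s export lists a referring domain only once. The sample rows are hypothetical.

```python
from collections import defaultdict


def add_times_linked(rows):
    """Add 'Times Linked to Competitors': how many competitors each domain links to."""
    competitors_by_domain = defaultdict(set)
    for row in rows:
        competitors_by_domain[row["URL"]].add(row["Competitor"])
    for row in rows:
        row["Times Linked to Competitors"] = len(competitors_by_domain[row["URL"]])
    return rows


# Hypothetical rows after the clean-up in step 4.
rows = add_times_linked([
    {"Competitor": "example-bridal.com", "URL": "weddingblog.example"},
    {"Competitor": "example-gowns.com", "URL": "weddingblog.example"},
    {"Competitor": "example-bridal.com", "URL": "forum.example"},
])
```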

To do this, firstly add a heading in column H called ‘Already Linking to Site?’. Next, create a new sheet in your spreadsheet called ‘My Site Links’ and export all your referring domains from Majestic for your site’s domain. Then paste the export into the newly created sheet.

Now, in cell H2 in our first sheet, add the following formula:

=IFERROR(IF(MATCH(B2,'My Site Links'!B:B,0),"Yes",),"No")

This checks whether the domain in cell B2 appears in column B of the ‘My Site Links’ sheet and returns ‘Yes’ or ‘No’ accordingly. Now copy columns G and H and paste them as values, again to remove the formulas.
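The same Yes/No check can be sketched in Python with a set lookup. The list of your own referring domains is assumed to come from the ‘My Site Links’ export; comparisons are lower-cased because Excel’s MATCH is not case-sensitive either. The domains shown are hypothetical.

```python
def add_already_linking(rows, my_referring_domains):
    """Flag rows whose domain already links to your site, like the MATCH formula."""
    mine = {domain.strip().lower() for domain in my_referring_domains}
    for row in rows:
        linked = row["URL"].strip().lower() in mine
        row["Already Linking to Site?"] = "Yes" if linked else "No"
    return rows


# Hypothetical referring domains for your own site.
my_links = ["WeddingBlog.example", "localnews.example"]
rows = add_already_linking(
    [{"URL": "weddingblog.example"}, {"URL": "forum.example"}],
    my_links,
)
```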

In this example, I have added ellisbridals.co.uk as our site.

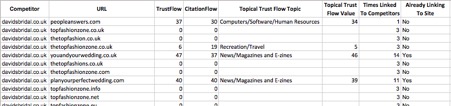

6 – Organic traffic estimate (optional)

This step is entirely optional, but at this point I usually also pull in some metrics from SEMrush.

I like to use SEMrush’s Organic Traffic metric as a further indication of how well a website is ranking for its target keywords. If a site is not ranking very well or has a low organic traffic score, this is a pretty good indication that it has been penalised, de-indexed or is just low quality.

Move on to step 8 if you do not want to do this.

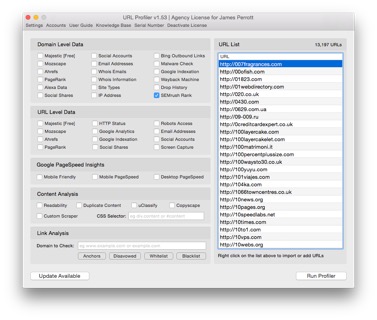

To get this information from SEMrush, you can use URL Profiler. Just save your spreadsheet as a CSV, right-click in the URL list area in URL Profiler, then select import CSV and merge data.

Next, you need to tick ‘SEMrush Rank’ in the domain level data, input your API key, then run the profiler.

If you are running this on a large data set and want to speed up collecting the SEMrush metrics, I sometimes remove domains with a Trust Flow score of 0 to 5 in Excel before importing. This eliminates the bulk of the low-quality, untrustworthy domains that you do not want to be building links from. It also saves some SEMrush API credits!
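That pre-filter is a one-liner if the data is already in Python. This sketch assumes the ‘TrustFlow’ header kept in step 4 and uses the 0 to 5 cut-off mentioned above. Because the SEMrush metric is domain-level, it also de-duplicates the remaining domains; that extra step is our own assumption about where further credits could be saved.

```python
def drop_low_trust(rows, max_low_score=5):
    """Drop rows with a Trust Flow of 0 to max_low_score (inclusive)."""
    return [row for row in rows if float(row["TrustFlow"]) > max_low_score]


# Hypothetical rows from the combined sheet.
rows = [
    {"URL": "spammy.example", "TrustFlow": "3"},
    {"URL": "thin.example", "TrustFlow": "5"},
    {"URL": "weddingblog.example", "TrustFlow": "35"},
]
kept = drop_low_trust(rows)
# Unique domains left to look up.
domains_to_look_up = sorted({row["URL"] for row in kept})
```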

7 – Clean up URL Profiler output (still optional)

Now that we have the new spreadsheet including SEMrush metrics, you just need to clean up the output in the combined results sheet.

I usually remove all the columns added by URL Profiler and just leave the new SEMrush Organic Traffic column.

8 – Visualise the data using a network graph

I usually do steps 8, 9 and 10 so that the data is more presentable than a plain spreadsheet. It also makes filtering the data to find your ideal metrics easier.

These are very quick and easy to create using Google Fusion Tables, so I recommend doing them.

To create them, save your spreadsheet as a CSV file, go to create a new file in Google Drive and select the ‘Connect More Apps’ option. Search for ‘Fusion Tables’ and connect the app to your Drive account.

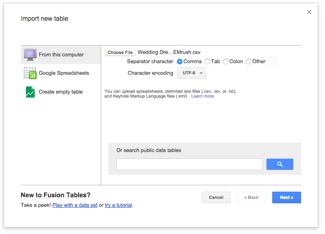

Once that is done, create a new file in Google Drive and select Google Fusion Tables. We then just need to upload our CSV file from our computer and select next in the bottom right.

After the CSV has been loaded, you will have to import the table by clicking next again. Give your table a name and select finish.

9 – Create a hub/expert network graph

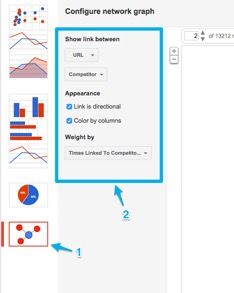

Now that the rows are imported, we need to create our network graph by clicking on the red + icon and then ‘add chart’.

Next, choose the network graph at the bottom and configure the graph with the following settings:

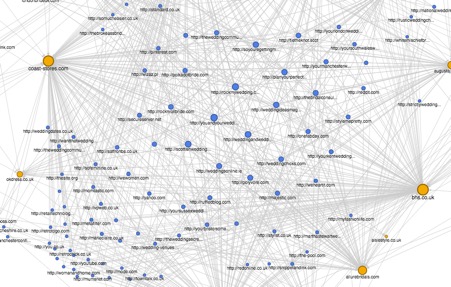

Your graph should now look something like the one below. You may need to increase the number of nodes shown so that more sites appear; be mindful that the more nodes there are, the more demanding the chart is on your computer. You will also need to select ‘done’ in the top right corner of the chart so that we are no longer configuring it.

If you have not already figured it out, the yellow circles on the chart are our competitors and the blue circles are the sites linking to them. The bigger a competitor’s circle, the more referring domains it has. A linking site’s circle grows with how much of a hub domain it is, because when setting up the chart we weighted it by the number of times each site links to our competitors.
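The sizing logic described above can be sketched in plain Python, which may help if you want to feed the same data into another network-graph tool. Each row becomes an edge between a linking domain and a competitor; competitor nodes are weighted by their number of referring domains and linking nodes by how many competitors they link to. The ‘Competitor’ and ‘URL’ headers follow step 4, and the sample rows are hypothetical.

```python
from collections import Counter


def build_link_graph(rows):
    """Return the edges and node weights for a competitor link graph."""
    edges = [(row["URL"], row["Competitor"]) for row in rows]
    # Competitor (yellow) node weight: number of referring domains.
    competitor_weight = Counter(row["Competitor"] for row in rows)
    # Linking-site (blue) node weight: number of distinct competitors it links to.
    linking_weight = Counter(url for url, _ in set(edges))
    return edges, competitor_weight, linking_weight


# Hypothetical rows from the combined sheet.
rows = [
    {"Competitor": "example-bridal.com", "URL": "weddingblog.example"},
    {"Competitor": "example-gowns.com", "URL": "weddingblog.example"},
    {"Competitor": "example-bridal.com", "URL": "forum.example"},
]
edges, competitor_weight, linking_weight = build_link_graph(rows)
```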

While the above graph looks pretty great, it contains quite a lot of sites that fit the ‘unrelated page of large in-degree’ categorisation from the Kleinberg paper, as they only link to one authority site.

We want to be turning the diagram on the right into the diagram on the left:

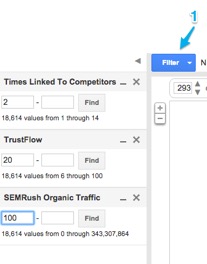

This is really simple to do by adding the filters below.

Filtering to sites that link to more than one competitor leaves only hub/expert domains; filtering by Trust Flow and SEMrush Organic Traffic removes lower-quality, untrustworthy domains.

You will need to play around with the Trust Flow and SEMrush Organic Traffic metrics depending on the sites you are trying to target. Our chart has now gone down to 422 domains from 13,212.

If you want to, at this point you can also add another filter to only show sites that aren’t already linking to your site. Here is what our chart now looks like:

The above chart is now a lot more manageable. You can see our top hub/expert domains we want to be building relationships with floating around the middle of the chart. Here is a close-up of some of those domains:

Your PR/Outreach team should now have plenty to be getting on with! The results are pretty good, with heaps of wedding-related sites that you should start building relationships with.
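The hub filters can also be expressed in code, which makes the thresholds easy to tweak and re-run. In this sketch the Trust Flow and traffic thresholds are placeholders (as noted above, you will need to tune them), ‘SEMrush Organic Traffic’ is an assumed name for the column added in step 6, and the rows are hypothetical. Pass `min_traffic=0` if you skipped the optional SEMrush steps.

```python
def hub_targets(rows, min_trust_flow=15, min_traffic=100, exclude_existing=True):
    """Return (domain, times linked) for hub domains, best hubs first.

    Keeps domains that link to more than one competitor and pass the
    Trust Flow and organic traffic thresholds.
    """
    hubs = {}
    for row in rows:
        if row["Times Linked to Competitors"] <= 1:
            continue  # links to a single authority: not a hub
        if float(row["TrustFlow"]) < min_trust_flow:
            continue
        if float(row.get("SEMrush Organic Traffic", 0)) < min_traffic:
            continue
        if exclude_existing and row["Already Linking to Site?"] == "Yes":
            continue
        hubs[row["URL"]] = row["Times Linked to Competitors"]
    return sorted(hubs.items(), key=lambda item: item[1], reverse=True)


def make_row(url, times, tf, traffic, linking):
    return {"URL": url, "Times Linked to Competitors": times, "TrustFlow": tf,
            "SEMrush Organic Traffic": traffic, "Already Linking to Site?": linking}


rows = [
    make_row("weddingblog.example", 3, "30", "5000", "No"),
    make_row("bridalmag.example", 2, "22", "800", "No"),
    make_row("forum.example", 1, "40", "9000", "No"),
    make_row("spammy.example", 4, "3", "10", "No"),
    make_row("partner.example", 3, "35", "2000", "Yes"),
]
targets = hub_targets(rows)
```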

10 – Filter to show pages that will pass high topical PageRank

Before we get into creating this graph, I am first going to explain why we can substitute Majestic’s Topical Trust Flow for Topic-sensitive PageRank.

Topical Trust Flow works in a very similar way to Topic-sensitive PageRank: it starts from a manually reviewed set of seed sites, and this topical data is then propagated through the web to give a Topical Trust Flow score for every page and domain in Majestic’s index.

This gives you a good idea of what topic an individual site is an authority on. In this case, if a site has a high Topical Trust Flow score for a wedding-related topic, we want some of that wedding-related authority to be passed on to us via a link.

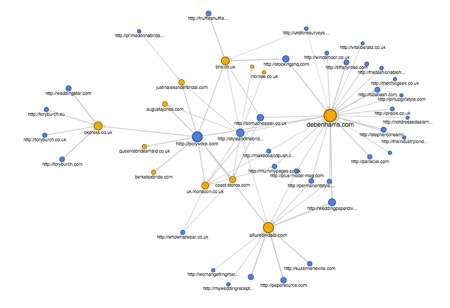

Now, onto creating the graph. Since we know how these graphs work, doing this should be a lot quicker.

Create another network graph just as before, except this time weight it by Topical Trust Flow value. Create a filter for Topical Trust Flow Topic and pick any topics related to your site.

For this site, I have chosen Shopping/Weddings and Shopping/Clothing. I usually also use a similar filter to the previous chart for Trust Flow and organic traffic to prevent showing any low-quality results.

Fewer results are returned for this chart, but if you want more authority passed within a topic, these are the sites you want to be building relationships with.

You can play around with the different topics depending on the sites you want to try and find. For example, you may want to find sites within the News/Magazines and E-Zines topic for PR.
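The topical filter follows the same pattern. This sketch assumes the tidied Topical Trust Flow headers from step 4 are named ‘Topical Trust Flow Topic’ and ‘Topical Trust Flow Value’ (your own names may differ) and ranks matching sites by their Topical Trust Flow value. The topics are the two used above; the rows and thresholds are hypothetical.

```python
def topical_targets(rows, topics, min_trust_flow=15, min_traffic=0):
    """Return (domain, Topical Trust Flow value) for sites in the chosen topics."""
    best = {}
    for row in rows:
        if row["Topical Trust Flow Topic"] not in topics:
            continue
        if float(row["TrustFlow"]) < min_trust_flow:
            continue
        if float(row.get("SEMrush Organic Traffic", 0)) < min_traffic:
            continue
        value = float(row["Topical Trust Flow Value"])
        # A domain appears once per competitor it links to; keep its top value.
        best[row["URL"]] = max(value, best.get(row["URL"], 0.0))
    return sorted(best.items(), key=lambda item: item[1], reverse=True)


rows = [
    {"URL": "weddingblog.example", "TrustFlow": "30",
     "Topical Trust Flow Topic": "Shopping/Weddings", "Topical Trust Flow Value": "28"},
    {"URL": "fashionmag.example", "TrustFlow": "40",
     "Topical Trust Flow Topic": "Shopping/Clothing", "Topical Trust Flow Value": "35"},
    {"URL": "carblog.example", "TrustFlow": "45",
     "Topical Trust Flow Topic": "Recreation/Autos", "Topical Trust Flow Value": "40"},
]
targets = topical_targets(rows, topics={"Shopping/Weddings", "Shopping/Clothing"})
```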

11 – Replicate Filters in Google Sheets

This step is very simple and does not need much explanation.

I import the spreadsheet we created earlier into Google Sheets and then just duplicate the sheet and add the same filters as the ones I created in the network graphs. I usually also add an ‘Outreached?’ header so the team knows if we have an existing relationship with the site.

I recommend doing this because, while the charts look great and visualise your data nicely, a spreadsheet makes it easier to track which sites you have already spoken to.

Summary

You should now know what you need to do for your site or client not just to drive more link equity into their site, but to drive topically relevant link equity that will benefit them the most.

While this may seem like a long process, the charts do not take long to create, especially compared with the benefit the site will receive from them.

There are many more uses for network graphs. I occasionally use them to visualise a site’s internal links and find gaps in its internal linking strategy.

I would love to hear any other ideas you may have to make use of these graphs, as well as any other things you like to do when creating a link building strategy.

Sam Underwood is a Search and Data Executive at Zazzle and a contributor to Search Engine Watch.

The article Imitating search algorithms for a successful link building strategy was first seen from https://searchenginewatch.com
