This week saw the publication of Mary Meeker’s annual Internet Trends report, packed full of data and insights into the development of the internet and digital technology across the globe.

Particularly of interest to us here at Search Engine Watch is a 21-page section on the evolution of voice and natural language as a computing interface, titled ‘Re-Imagining Voice = A New Paradigm in Human-Computer Interaction’.

It looks at trends in recognition accuracy, voice assistants, voice search and sales of devices like the Amazon Echo to build up an accurate picture of how voice interfaces have progressed over the past few years, and how they are likely to progress in the future.

So what do we learn from the report and Meeker’s data about the role of voice in internet trends for 2016?

Voice search is growing exponentially

We know that voice is a fast-rising trend in search, as the proliferation of digital assistants and the advances in interpreting natural language queries make voice searching easier and more accurate.

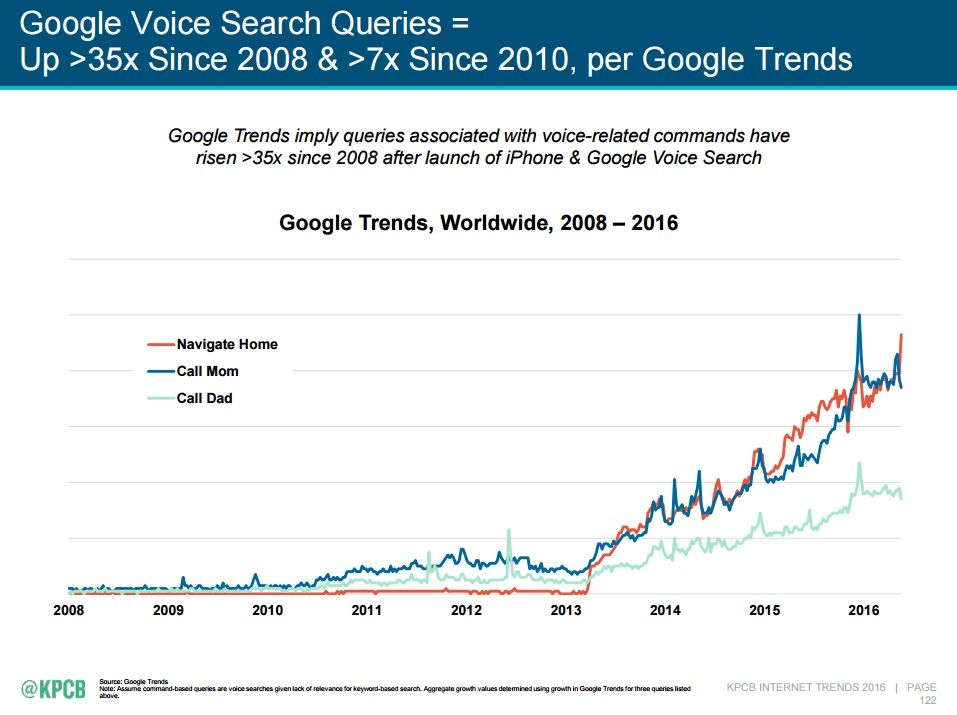

But the figures from Meeker’s report show exactly how far voice search has grown over the past eight years, since the launch of Google Voice Search on the iPhone in 2008. According to Google Trends, Google voice queries have risen more than 35-fold from 2008 to today, with “call mom” and “navigate home” among the most commonly used voice commands.

Tracking the rise of voice-specific queries such as “call mom”, “call dad” and “navigate home” is an unexpected but surprisingly accurate way to map the growth of voice search and voice commands. As an aside, anyone can track this data for themselves by entering the same terms into Google Trends. It’s interesting to think what the signature voice commands might be for tracking the use of smart home hubs like Amazon Echo in a few years’ time.

Google is, of course, by no means the only search engine experiencing this trend, and the report goes on to illustrate the rise in speech recognition and text-to-speech usage for the Chinese search engine Baidu. Meeker notes that “typing Chinese on a small cellphone keyboard [is] even more difficult than typing English”, leading to “rapidly growing” usage of voice input across Baidu’s products.

Meeker also plots a timeline of key milestones in the growth of voice search since 2014, noting that 10% of Baidu search queries were made by voice in September 2014, that Amazon Echo was the fastest-selling speaker in 2015, and that Andrew Ng, Chief Scientist at Baidu, has predicted that by 2020, 50% of all searches will be made with either images or speech.

While developments in image search haven’t made as much of a splash as those in voice, they shouldn’t be overlooked, as the technology that will let us ‘search’ objects in the physical world is coming on in leaps and bounds. In April, Bing updated its iOS app to let users search the web with photos from their phone camera, although the feature is limited to users in the United States, as they’re the only ones who can download the app.

The visual search app CamFind, which has been around since 2013, also has an uncanny ability to identify objects in the physical world and call up product listings, which has a huge amount of potential for both search and marketing.

Why do people use voice?

The increase in voice search and voice commands is not only due to improved technology; the most advanced technology in the world still wouldn’t see widespread adoption if it weren’t useful. So what are voice input adopters (at least in the United States) using it for?

The most common setting for using voice input is the home, which explains the popularity of voice-controlled smart home hubs like Amazon Echo. In second place is the car, which tallies with the most popular motivation for using voice input: “Useful when hands/vision occupied”.

Thirty percent of respondents found voice input faster than typing, which also makes sense: Meeker observes elsewhere in the report that humans can speak almost four times as quickly as they can type, at an average of 150 words per minute (spoken) versus 40 words per minute (typed). While this has always been the case, it is the ability of technology to accurately parse those words and quickly deliver a response that is really beginning to make voice input faster and more convenient than text.

As Andrew Ng said, in a quote that is reproduced on page 117 of the report, “No one wants to wait 10 seconds for a response. Accuracy, followed by latency, are the two key metrics for a production speech system…”

The third-most popular reason for using voice input, “Difficulty typing on certain devices”, is a reminder of the important role that voice has always played, and continues to play, in making technology more accessible. The least popular setting for using voice input is at work, which could be due to the difficulty in picking out an individual user’s voice in a work environment, or due to a social reluctance to talk to a device in front of colleagues.

Meeker’s report also looks into the usage of one digital assistant in particular: Hound, an assistant app developed by the audio recognition company SoundHound, which was also recently used to add voice search capabilities to SoundHound’s music search engine of the same name.

What’s interesting about the usage breakdown for Hound, at least among the four fairly broad categories that the report divides it into, is that no one use type dominates overwhelmingly. The most popular use for Hound is ‘general information’, at 30%, above even ‘personal assistant’ (which is what Hound was designed to do) at 27%.

Combined with queries for ‘local information’, information queries make up more than half of all voice queries to Hound, suggesting that many users still see voice primarily as a gateway into search. It would be interesting to see similar graphs for usage of Siri, Cortana and Google’s assistants to determine whether this trend is borne out across the board.

A tipping point for voice?

Towards the end of the section, Meeker looks at the evolution and ownership of the Amazon Echo. Because it was designed specifically for voice (unlike smartphones, which had voice capabilities added to them), the Echo is perhaps the most useful product case study for the adoption of voice commands.

Meeker notes on one slide that computing industry inflection points are “typically only obvious with hindsight”. On the next, she juxtaposes the peak of iPhone sales in 2015 and the beginning of their estimated decline in 2016 with the take-off of Amazon Echo sales in the same period, seeming to suggest that one caused the other, or that one device is giving way to the other for dominance of the smart device market.

I’m not sure if I would agree that the Amazon Echo is taking over from the iPhone (or from smartphones), since they’re fundamentally different devices: one is designed to be home-bound, the other portable; one is visual and the other is not; and as I pointed out above, the Amazon Echo is designed to work exclusively with voice, while the iPhone simply has voice capabilities.

But it is interesting to view the trend as part of a shift in the computing market towards a different type of technology: an ‘always-on’, Internet of Things-connected device specifically designed to work with voice, and perhaps that’s the point that Meeker is making here.

Meeker points to the fast movement of third-party developers to build platforms which integrate the Alexa voice assistant into different devices as evidence of the expansion of “voice as computing interface”. While I think we will always depend on a visual interface for many things, this could be the beginning of a tipping point where voice commands take over from buttons and text as the primary input method for most devices and machines.

Hopefully Meeker will revisit this topic in subsequent trends reports so that we can see how things play out over the next few years.

The article What does Meeker’s Internet Trends report tell us about voice search? first appeared on https://searchenginewatch.com
